Inside ElevenLabs’ $3.3B AI Unicorn

ElevenLabs was founded in 2022 by childhood friends Mati Staniszewski (a former Palantir strategist) and Piotr Dąbkowski (an ex-Google machine-learning engineer). In just two years, the company has emerged as a category-defining player in synthetic speech, developing deep-learning models that generate ultra-realistic voices and speech in over 30 languages. ElevenLabs’ core mission is to make all content universally accessible in any voice, language, and sound – essentially becoming the “OpenAI for audio”. Its platform allows users to clone voices from short samples and create lifelike speech with rich emotion and intonation, addressing use cases from audiobooks and film dubbing to gaming, education, and accessibility. The company has experienced explosive growth, surpassing $90 million in annual recurring revenue by late 2024 (up ~260% from about $25 million at the start of that year) and achieving profitability, while employees at more than 60% of Fortune 500 companies have used its platform. Investors have taken note: ElevenLabs raised a total of $281 million across four funding rounds, including a Series C in January 2025 that valued the firm at $3.3 billion post-money.

This report provides a 360° analysis of ElevenLabs – from its origins and technology stack to its financials, go-to-market strategy, competitive landscape, and future outlook – drawing on primary sources (company statements, press releases, investor materials) and expert commentary. Key findings include: the company’s compelling founding story and research-driven culture; its differentiated voice-AI platform and product roadmap (e.g., multi-language dubbing, voice-cloning marketplace); a detailed funding history and ownership breakdown; strong growth in revenue and enterprise adoption alongside proactive measures to mitigate deepfake misuse; and an assessment of strategic opportunities and risks as voice AI becomes an increasingly important interface in 2025–2027. Investors and analysts view ElevenLabs as a potential cornerstone of the emerging generative-audio ecosystem, while also cautioning that regulatory developments and competition from tech giants are critical factors to monitor. The following sections elaborate on each dimension of ElevenLabs’ business and outlook, with full citations provided for all facts and figures.

Founding Story & Mission

Founders’ backgrounds and motivations: ElevenLabs was founded by two Polish-born entrepreneurs, Mati Staniszewski and Piotr Dąbkowski, who met as schoolmates and later reunited to tackle a shared passion. Staniszewski, now CEO, had been a deployment strategist at Palantir, while CTO Dąbkowski worked as a machine-learning engineer at Google’s DeepMind. Both founders brought complementary skills – business strategy and cutting-edge AI research – but more importantly, they shared a personal frustration that became the company’s inspiration. Growing up in Warsaw, they were accustomed to foreign films and TV shows being aired with a single monotonous voice-over in Polish, rather than proper dubbing by multiple actors. This one-voice-fits-all dubbing practice in Poland felt culturally limiting and “broke the immersion” of content. Staniszewski and Dąbkowski dreamed of a better solution: what if AI could instantly translate and reproduce any voice in any language, enabling movies, audiobooks and media to be experienced in their original voices across the world? This vision of seamless multilingual dubbing – to “make sure film dubbing can be done in all languages, in the same voices as the original” – became the North Star for ElevenLabs’ founding in 2022.

Formation timeline: ElevenLabs formally started in April 2022, when the founders incorporated the company and began R&D on AI voice models. Throughout 2022 they operated in stealth, assembling a small team of audio-AI researchers and building prototype models capable of high-quality speech synthesis. By late 2022, their breakthroughs in voice cloning and text-to-speech prompted them to prepare a public launch. In January 2023, ElevenLabs unveiled its beta platform to the public. The launch allowed anyone to enter text and generate spoken audio in natural-sounding voices, or even create a custom voice given a short sample clip. Initially bootstrapped, the founders struggled to raise capital – “at the beginning of 2023, [ElevenLabs] struggled to raise a $2 million pre-seed round,” as Staniszewski recounted. Nevertheless, they secured roughly $2 million in pre-seed funding in early 2023 (with participation from early believers including Andreessen Horowitz). That infusion enabled the team (just 5–8 people at the time) to scale up the platform’s infrastructure and research. Traction came quickly once the beta went live: within six months, ElevenLabs amassed over 1 million registered users who generated 10 years of audio content on the platform. This viral growth and the clear product-market fit helped attract larger investors by mid-2023.

By June 2023, ElevenLabs announced a $19 million Series A financing led by notable tech figures Nat Friedman (former GitHub CEO), Daniel Gross, and Andreessen Horowitz, valuing the company at around $100 million post-money. With fresh capital, the company accelerated development and began launching new features (such as an AI speech detector and tools to instantly create audiobooks). The team also started expanding beyond London and Warsaw to a New York presence, reflecting a global ambition. In January 2024, ElevenLabs raised an $80 million Series B co-led by Andreessen Horowitz, Nat Friedman and others, which catapulted its valuation to unicorn status (≈ $1.1 billion) about a year after the beta launch. By this time the startup had grown to ~40 employees across its offices in London, New York, and Warsaw. The founders remained focused on their original mission even as use cases multiplied. “The goal hasn’t changed – the possibility to create content in every language, in every voice, in every sound,” Staniszewski said in mid-2024. In late 2024, ElevenLabs was selected for the Disney Accelerator program to explore applications of its tech in entertainment, like high-quality dubbing for films. Finally, in January 2025 the company closed a $180 million Series C (co-led by Andreessen Horowitz and ICONIQ Growth) at a $3.3 billion valuation. By this point ElevenLabs employed around 120 people across its hubs and was being hailed as an emerging leader in generative-AI audio.

Mission and vision: ElevenLabs positions itself first and foremost as an AI research-driven company with a bold mission: “to build the singular most comprehensive audio-AI platform in the world, solve AI audio intelligence, and make all information accessible in any voice, language, and sound.” In simpler terms, the founders want to enable a future where interacting with technology via voice is as natural and ubiquitous as text or visuals. They often cite the analogy of being an “OpenAI for audio,” laser-focused on the audio modality that others overlooked. Staniszewski notes that while many AI firms chase large language models or vision, “there aren’t too many companies that focus solely on audio” – leaving a gap ElevenLabs is eager to fill. The origin story of fixing Polish dubbing grew into a broader vision: to eliminate language barriers in media and communication. In an interview, Staniszewski described dreaming of “content in every language, in every voice” and even real-time voice translation of conversations or media. Underlying this is a belief that voice is the most fundamental human interface. “Speech is how we naturally communicate… digital interactions should happen by voice – fluid, natural, effortless as a conversation,” he said upon the Series C raise. This philosophy guides ElevenLabs’ strategy of developing the core infrastructure (high-quality voice synthesis) and then applying it to as many use cases as possible, from talking books to AI customer-service agents. The company’s commitment to responsible AI also features in its mission: both founders are described as being “humble, thoughtful and attentive to AI safety” in their approach. In summary, ElevenLabs’ founding story is one of two innovators turning a niche personal pain point into a global opportunity – leveraging advances in deep learning to let anyone’s voice be replicated or translated with high fidelity. Their mission now encapsulates a sweeping goal: to make voice AI a ubiquitous utility that empowers creators, businesses, and consumers around the world.

Product & Technology Stack

Core voice-AI models and capabilities: ElevenLabs’ platform is built on proprietary deep-learning models for speech synthesis that are widely regarded as state-of-the-art in quality and versatility. At its core, the company developed an AI text-to-speech (TTS) model that can generate remarkably human-like speech from written text, capturing subtleties like natural pauses, intonation, emotion, and even breaths. This model underpins the flagship Speech Synthesis service, which offers over a dozen default AI voices and the ability to create custom voices. Uniquely, ElevenLabs’ voice-cloning system can produce a digital voice clone from just a one-minute audio sample of a real person. Users can upload a short clip of someone speaking, and the model creates a synthetic voice that closely mimics the speaker’s timbre, accent and speaking style. This low-data requirement for high-fidelity cloning is a key differentiator – traditionally, training a custom TTS voice (e.g., with Google or Amazon’s tools) required hours of recorded audio, whereas ElevenLabs cuts the requirement to about a minute of audio while preserving realism.

Under the hood, the company’s research team (a small, elite group of audio-AI researchers as of 2024) has developed several specialized models in addition to baseline TTS. One is a Speech-to-Speech (S2S) model, also called a voice-conversion model, which can take an existing recording of speech and transform it into another voice – preserving the original content and intonation but in a different speaker’s voice or a different language. For example, a news broadcast in English could be converted into Spanish while retaining the original newscaster’s voice and tone, using a combination of transcription, translation, and voice cloning. This powers ElevenLabs’ AI Dubbing tool, which as of 2023 could automatically translate and dub audio/video content into 29 languages while keeping the original speaker’s voice and emotions. It effectively enables cross-language dubbing at scale – a direct realization of the founders’ initial goal. Another component is the Voice Design system: instead of cloning an existing voice, users can generate entirely new synthetic voices by adjusting parameters or even providing a text description of the voice’s traits. This allows creating a custom voice (e.g., “a warm female narrator voice in her 40s with a British accent”) without any real voice sample needed, which is valuable for content creators who want unique voices for characters or branding.
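The dubbing flow described above (transcription, then translation, then synthesis in the original speaker's voice) can be pictured as three composed stages. The following is a minimal sketch of that idea, not ElevenLabs' implementation: `DubbingPipeline` and the stage callables are hypothetical names, and the stand-in stages only manipulate strings so that the example runs without any model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DubbingPipeline:
    """Hypothetical three-stage dubbing flow; every stage is supplied by the caller."""
    transcribe: Callable[[bytes], str]         # source audio -> source-language text
    translate: Callable[[str, str], str]       # (text, target language code) -> translated text
    synthesize: Callable[[str, bytes], bytes]  # (text, voice reference audio) -> dubbed audio

    def dub(self, source_audio: bytes, target_lang: str) -> bytes:
        text = self.transcribe(source_audio)
        translated = self.translate(text, target_lang)
        # The source audio doubles as the voice reference, so the dub keeps the speaker's voice.
        return self.synthesize(translated, source_audio)


# Stand-in stages (assumptions for illustration; real stages would call STT, MT and TTS models).
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_translate(text: str, target_lang: str) -> str:
    return f"[{target_lang}] {text}"


def fake_synthesize(text: str, voice_reference: bytes) -> bytes:
    return text.encode("utf-8")


pipeline = DubbingPipeline(fake_transcribe, fake_translate, fake_synthesize)
dubbed = pipeline.dub(b"Good evening", "es")
print(dubbed)
```

Keeping the stages as injected callables mirrors the point made in the text: the same voice reference can be reused across target languages, and any single stage can be swapped without touching the others.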

Beyond voices, ElevenLabs has expanded its audio-AI research into adjacent areas. In late 2024 it introduced a Sound Effects Generation model that can create sound effects from text descriptions (e.g., “footsteps on wooden floor”). This signals an expansion beyond speech into general audio synthesis, aligning with the mission to handle “any… sound” via AI. The company also built its own speech-to-text (STT) module to support its conversational AI offerings – while STT is a more commoditized space, ElevenLabs sees value in tailoring speech recognition for its use cases and languages (especially where off-the-shelf STT underperforms). Staniszewski noted that for many languages, existing speech recognition is “pretty bad,” so they are developing better STT to integrate with their voice generation for full-duplex voice interactions.

All these models are delivered with a strong emphasis on speed and scalability. ElevenLabs optimized a fast inference engine for its voices – its new “Flash” voice model achieves as low as 75 milliseconds latency, making real-time voice conversations feasible. It also offers a “Turbo” model optimized for responsiveness when low latency is needed (at some trade-off in complexity). These improvements are crucial for applications like live conversational agents or interactive gaming. The company has noted achieving “sub-1-second latency” even while maintaining human-level quality. To accomplish this, ElevenLabs developed advanced audio compression and representation techniques that drastically reduce the size of voice data (100× more efficient than MP3, according to internal figures). This allows them to transmit and process voice signals quickly without losing the nuances that make them sound human.

Product suite and platform: Building on its core models, ElevenLabs has assembled a comprehensive suite of products and APIs. The user-facing Web App (launched as beta in Jan 2023) is a simple interface where users can input text and choose a voice to generate audio, or upload a voice sample to create a custom cloned voice. Sliders let users adjust parameters like intonation, emotion, cadence and other vocal characteristics, giving fine control over the output. This granularity is a competitive advantage – users can, for instance, make a voice sound more excited or somber as needed, features often lacking in generic TTS services. The web platform also introduced a “Projects” feature for editing long-form audio: users can generate entire audiobooks or podcasts and then tweak sections of the audio (much like a document editor) for pacing or tone. In 2024, ElevenLabs launched Studio, a more advanced production tool that provides a Google-Docs-like environment for long-form spoken-content creation. Studio allows combining text and audio editing, regenerating sentences or sections on the fly, and coordinating multi-speaker content – catering to publishers and authors who need to produce audiobooks or narration with a high degree of control.

For developers, ElevenLabs offers a full API and SDK so that its voice capabilities can be embedded in other applications. This API has been widely adopted: dozens of startups and projects (from AI assistants to news-reader apps) use ElevenLabs under the hood for voice generation. Notably, the platform is used by AI-content companies such as Synthesia (video avatars), HeyGen, and others to give voice to their avatars or chatbots. The API’s popularity stems from ElevenLabs being a one-stop shop for high-quality voices without requiring developers to build or train models themselves.
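To make the developer workflow concrete, the sketch below builds (but does not send) a text-to-speech request using only the Python standard library. It is an illustration under assumptions rather than official sample code: the endpoint path, the `xi-api-key` header, the `model_id` value and the `voice_settings` fields reflect the publicly documented REST API as best understood at the time of writing and should be checked against the current API reference; `build_tts_request`, the placeholder key and the placeholder voice ID are hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"  # assumed base URL; verify against current docs


def build_tts_request(api_key: str, voice_id: str, text: str,
                      model_id: str = "eleven_multilingual_v2") -> urllib.request.Request:
    """Build a POST request for speech synthesis without sending it."""
    payload = {
        "text": text,
        "model_id": model_id,  # assumed model identifier
        # Assumed tuning knobs, analogous to the sliders in the web app.
        "voice_settings": {"stability": 0.5, "similarity_boost": 0.75},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from a synthetic voice.")
print(request.get_method(), request.full_url)
```

Sending the request is left to the caller (for example with `urllib.request.urlopen(request)`), which keeps the example runnable offline and makes the request shape easy to inspect.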

Another key product is the Voice Library & Marketplace. This feature, launched in alpha in early 2024, created a marketplace where voice actors or users can share their own voice clones and earn royalties when others use them. The Voice Library contains both AI-generated voices and verified replicas of real people’s voices. For example, a voice actor can upload samples of their voice, create an AI clone, and offer it for licensing through the platform. When a customer uses that voice to generate audio, the original creator gets a portion of revenue as compensation. By April 2025, over 5,000 voices had been shared in the library and more than $2 million in payouts had been earned by voice creators. This marketplace approach is ElevenLabs’ attempt to align with the professional voice community and “harmonize AI advancements with established industry practices”. It addresses ethical concerns by giving voice owners control: they must verify their identity via a voice captcha and manual checks before a voice is listed, ensuring consent and authenticity. The marketplace also illustrates ElevenLabs’ platform strategy – encouraging a network effect where more voice options attract more users, which in turn generate more compensation for voice providers, creating a virtuous cycle and competitive moat.

Rounding out the product stack, ElevenLabs launched a mobile app called ElevenReader in late 2024. This app (on iOS/Android) can convert written content like news articles, PDFs, or e-books into spoken audio using the user’s choice of voices. It effectively acts as a personal AI narrator for all digital text. Initially offered with a free trial period, ElevenReader is a direct-to-consumer play aiming to bring ElevenLabs’ tech to everyday users (commuters, visually impaired users, etc.) as an “AI audiobook” for any content. This was the company’s first true consumer-facing product, marking an expansion beyond its primarily B2B and creator-oriented tools. The move was likely a response to demand from individuals who want to listen to content in custom voices, as well as a strategic attempt to build a direct user base that doesn’t solely rely on third-party developers or enterprise clients for distribution.

Key differentiators vs. competitors: ElevenLabs has risen rapidly by carving out a niche where big tech and other startups had not fully concentrated: high-quality, expressive voice synthesis as a dedicated focus. Unlike Amazon, Google, or Microsoft – who all have TTS offerings but treat them as one feature among many in larger cloud platforms – ElevenLabs poured all its R&D into pushing the frontier of voice quality and flexibility. This specialization has yielded superior voice quality, according to analysts, often described as more natural and emotive than the standard voices from big tech. For example, Google’s DeepMind unit demonstrated an advanced audio model called AudioLM in research labs, but Google has not productized anything with the level of open access and ease of use that ElevenLabs provides. Microsoft’s neural TTS can clone voices but is gated behind lengthy approval processes to prevent misuse, and it typically requires 30+ minutes of training data – making it impractical for casual or small creators. ElevenLabs, by contrast, offers instant cloning with minimal data through a simple web interface, attracting a huge community of creators and developers early on.

The timing of ElevenLabs’ launch also rode the wave of generative-AI hype spurred by OpenAI’s ChatGPT in late 2022. In 2023 there was “a frenzy of investor interest” in generative-AI companies across modalities, and ElevenLabs benefited from being one of the few with a polished product in the audio domain. Its branding as an “AI audio platform” focusing on voices helped it stand out. Even the founders acknowledge that OpenAI could become a formidable competitor if it turns its attention to audio, given OpenAI’s vast resources and talent. But so far, OpenAI has not released a dedicated voice product – their efforts have been more text- and image-focused – and Staniszewski believes OpenAI is less likely to match ElevenLabs on the niche use cases requiring extreme quality or control. “They [OpenAI] might not focus on [audio] as a vertical… their quality will get better and for some use cases it might be enough. In use cases where there is less control or less quality needed, but price is important, OpenAI will probably be a bigger competition,” he said. This hints at ElevenLabs’ strategy to compete on quality and feature richness (fine control, emotional range, etc.) even if generalist AI players offer basic voice synthesis cheaply. Indeed, ElevenLabs’ pricing is higher than some basic TTS APIs (its cost per minute is about 5× that of a smaller rival called Cartesia), but customers seem willing to pay a premium for the superior fidelity and capabilities.

Another differentiator is the breadth of ElevenLabs’ platform. Competitors often tackle slices of the voice-AI market: e.g., Papercup and Deepdub focus on video dubbing, Respeecher specializes in high-end voice cloning for studios, WellSaid Labs provides a library of synthetic voices for corporate content, etc. ElevenLabs is attempting to do it all under one roof – TTS, voice cloning, dubbing, real-time agents, even sound effects – and to serve every segment (creators, publishers, enterprises, consumers) on one platform. This “full-stack audio AI” approach is ambitious, but if executed, it creates a powerful network. For instance, an indie author can use the same platform to generate an audiobook narration, distribute it via ElevenLabs’ partnership integrations, and even select a voice from the marketplace, all within one ecosystem. No direct competitor currently offers this level of end-to-end solution in voice AI.

Lastly, ElevenLabs has accumulated proprietary data and IP by virtue of its scale. With millions of user-generated audio clips and 1,000+ years of audio content created on its platform to date, the company has a valuable dataset to further train and refine its models (while presumably respecting privacy and usage policies). It also employs advanced safeguards like hidden digital watermarks in generated audio to identify its output – a technical approach that not only addresses safety (see later section) but could become an industry standard if mandated. In terms of formal IP protection, ElevenLabs has filed trademarks for its brand and likely has trade-secret know-how in its model architectures, though no specific patents have been publicized. The rapid development cycle of AI means the company has prioritized research speed over patenting. Still, its head start in voice-model performance (especially for low-resource languages and instant cloning) is a defensible advantage as of 2025.

Funding History & Cap Table

ElevenLabs’ fundraising trajectory has been steep and fast, mirroring the broader investor enthusiasm for generative AI startups. Below is a breakdown of its funding rounds, investors, and valuation milestones, along with implications for ownership stakes:

Pre-Seed (2022/early 2023): The founders raised approximately $2 million in a pre-seed/angel round around January 2023, after early difficulty convincing investors ahead of the beta launch. The pre-seed valuation is estimated at ~$10–11 million based on reported subsequent valuations. Notable participants included Credo Ventures and angel investors Alex Macdonald and Bartek Pucek.

Seed/Series A (June 2023): ElevenLabs raised a $19 million Series A at approximately a $100 million post-money valuation. The round was co-led by Nat Friedman, Daniel Gross, and Andreessen Horowitz. Additional angel investors included Mike Krieger (Instagram), Brendan Iribe (Oculus), Mustafa Suleyman (DeepMind), along with funds Credo Ventures and Concept Ventures. Strategic investors Storytel and TheSoul Publishing also participated. Proceeds supported accelerated R&D and new product launches.

Series B (January 2024): An $80 million Series B valued ElevenLabs at ~$1.0–1.1 billion, achieving unicorn status. The round was again co-led by Andreessen Horowitz, Nat Friedman, and Daniel Gross. Major VCs including Sequoia Capital and SV Angel joined, alongside Smash Capital, BroadLight Capital, and Credo Ventures. Cumulative funds raised reached approximately $101 million.

Series C (January 30, 2025): ElevenLabs raised a $180 million Series C, tripling its valuation to $3.3 billion post-money. This round was co-led by Andreessen Horowitz and ICONIQ Growth. New institutional investors included NEA, World Innovation Lab, Valor Capital, Endeavor Catalyst, and Abu Dhabi’s Lunate. Existing investors like Sequoia, Salesforce Ventures, SV Angel, and Smash Capital maintained their stakes. Strategic corporate investors such as Deutsche Telekom, LG Tech Ventures, HubSpot Ventures, NTT Docomo Ventures, and RingCentral Ventures joined, highlighting partnership potential. The Series C brought total funding to $281 million.

Convertible Notes/Other Instruments: There’s no public indication of major convertible notes or SAFE rounds beyond the mentioned equity rounds. Rapid valuation appreciation negated the need for prolonged bridge financing.
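The round sizes above can be cross-checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this section (in millions of US dollars) and takes the upper end of the Series B valuation range; the "implied stake sold" is a rough back-of-envelope ratio of amount raised to post-money valuation, which assumes each round consisted entirely of newly issued shares and ignores option pools and secondary sales. The variable names are illustrative.

```python
from itertools import accumulate

# (round, amount raised in $M, post-money valuation in $M or None if not reported)
rounds = [
    ("Pre-seed", 2, None),
    ("Series A", 19, 100),
    ("Series B", 80, 1100),   # upper end of the ~$1.0-1.1B range
    ("Series C", 180, 3300),
]

# Running total of capital raised after each round.
cumulative = list(accumulate(raised for _, raised, _ in rounds))

# Rough share of the company sold in each priced round (assumption: primary shares only).
implied_stake_pct = {
    name: round(raised * 100 / post, 1)
    for name, raised, post in rounds
    if post is not None
}

for (name, raised, _), total in zip(rounds, cumulative):
    print(f"{name}: raised ${raised}M, cumulative ${total}M")
print(implied_stake_pct)
```

The running totals reproduce the $101 million and $281 million figures cited above, and the shrinking implied stake per round illustrates how the rising valuation let the company raise more capital while selling a smaller share each time.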

Financial & Operating Metrics

As a privately held startup, ElevenLabs does not disclose full financial statements. However, various reports and company communications provide insight into its key metrics. As of mid-2025, the company shows strong growth momentum in both usage and revenue, albeit with the high expenses typical of AI companies. Below we outline the best available information on revenue, growth, margins, customer base, and other operating indicators, along with clearly labeled estimates where exact figures are not public:

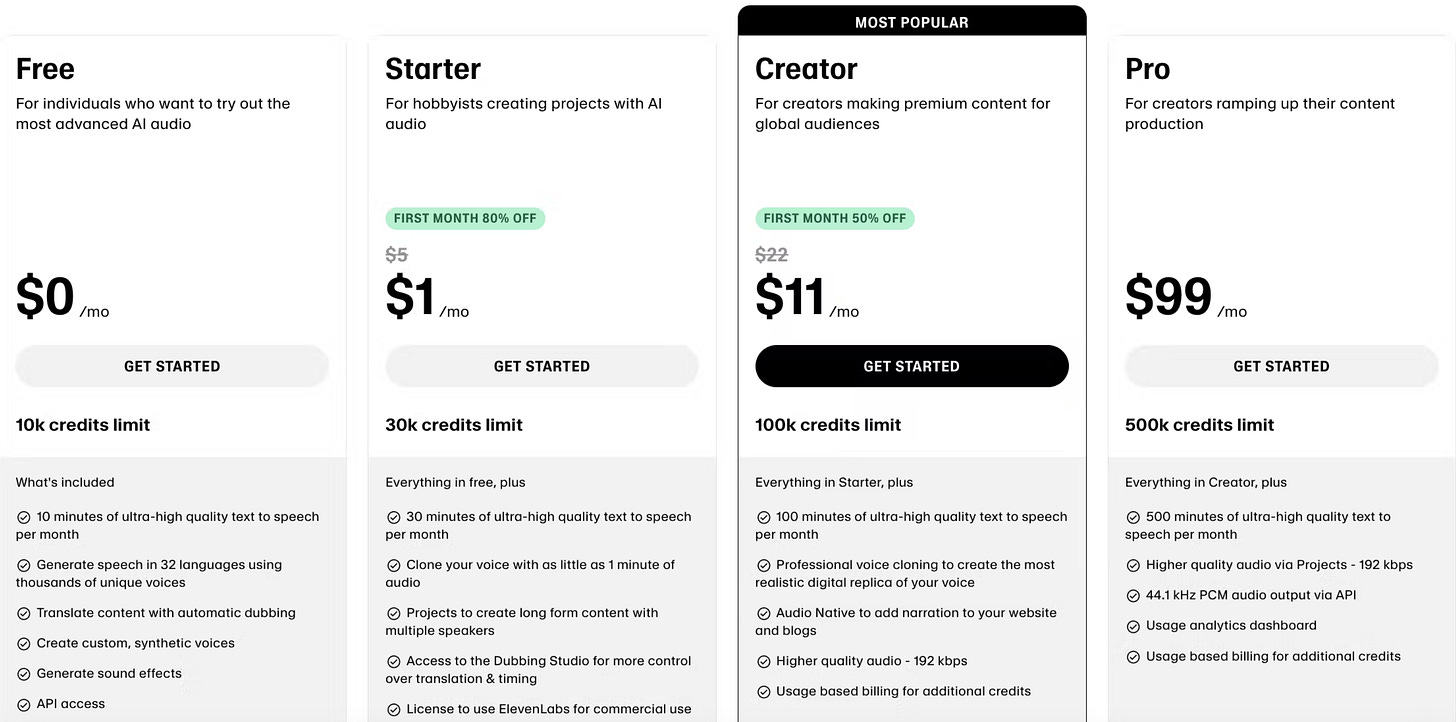

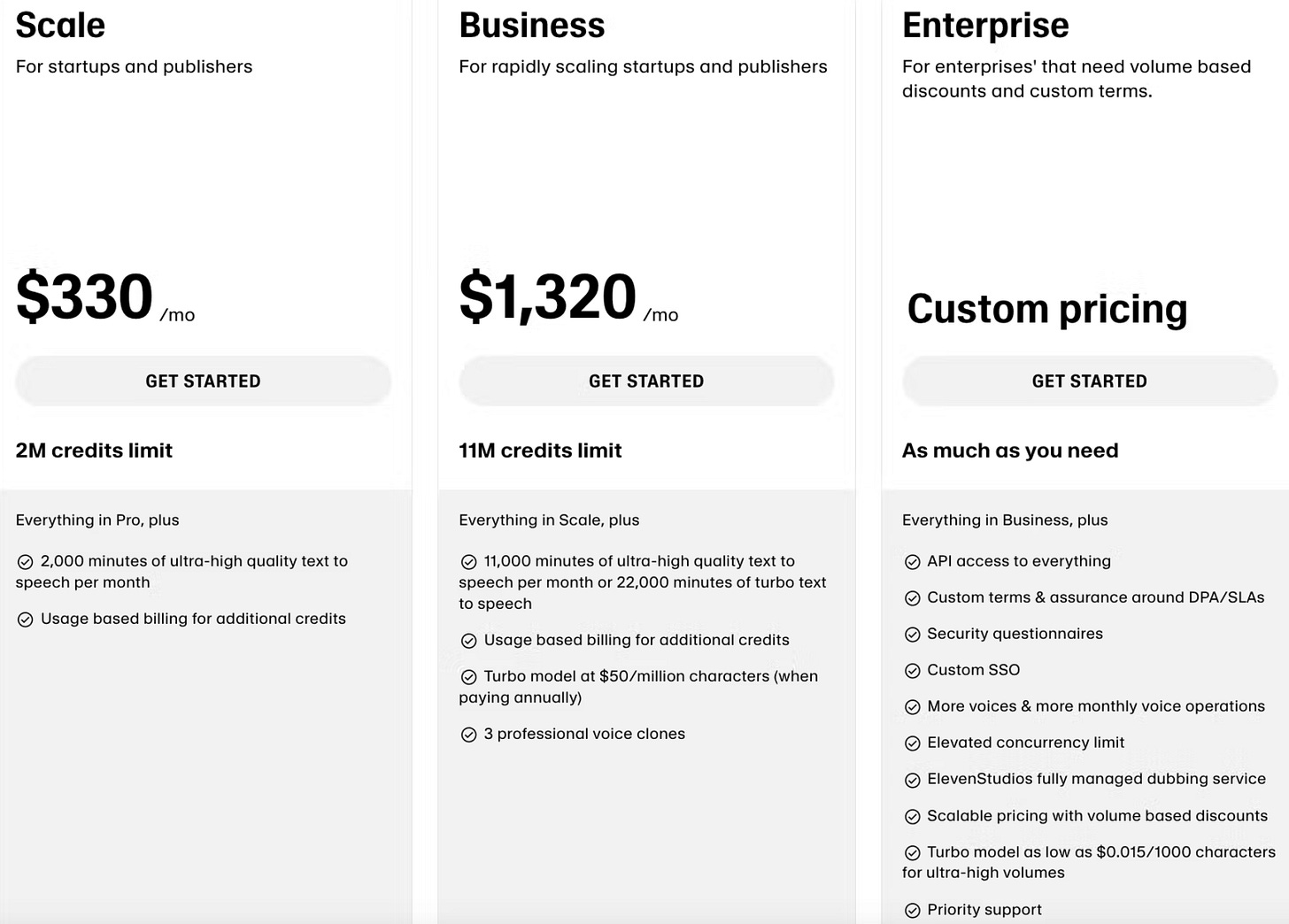

Revenue and growth: ElevenLabs' revenue comes from self-serve subscription plans and enterprise contracts for its voice API and tools. According to industry analysis by Sacra, ElevenLabs reached approximately $90 million in annual recurring revenue (ARR) as of October 2024. This is up dramatically from about $25M ARR at the beginning of 2024, representing ~260% growth in just nine months. By early 2025, the annual run-rate likely exceeded $100M. The Series C was done at roughly a 35x ARR valuation ($3.3B), suggesting strong investor optimism. Revenue composition includes tiered subscription plans and usage-based billing, with freemium options and paid plans ranging from $5-$22/month. Enterprise deals typically involve usage-based or volume-based pricing.
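The growth rate and valuation multiple quoted above follow from simple ratios. As a quick check using only the figures in this paragraph (in millions of US dollars; the function names are illustrative), the snippet below computes the ARR growth from $25M to $90M and brackets the Series C multiple between the October 2024 ARR and an assumed $100M run-rate in early 2025.

```python
def growth_pct(start: float, end: float) -> float:
    """Percentage increase from start to end."""
    return (end - start) * 100 / start


def arr_multiple(valuation: float, arr: float) -> float:
    """Valuation expressed as a multiple of annual recurring revenue."""
    return valuation / arr


arr_start_2024 = 25.0      # $M, beginning of 2024
arr_oct_2024 = 90.0        # $M, October 2024 (Sacra estimate cited above)
arr_early_2025 = 100.0     # $M, assumed run-rate ("likely exceeded $100M")
series_c_valuation = 3300.0  # $M, post-money

growth = growth_pct(arr_start_2024, arr_oct_2024)
multiple_high = arr_multiple(series_c_valuation, arr_oct_2024)
multiple_low = arr_multiple(series_c_valuation, arr_early_2025)

print(f"ARR growth: {growth:.0f}%")
print(f"Series C multiple: {multiple_low:.1f}x to {multiple_high:.1f}x ARR")
```

The two multiples sit on either side of the "roughly 35x" figure, which shows how sensitive the headline multiple is to which ARR snapshot is used as the denominator.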

Gross margin: ElevenLabs likely enjoys high gross margins (~70-80% estimated for 2024) due to its software/API business model. Despite intensive AI inference workloads leading to substantial cloud computing costs, the company reportedly achieved profitability at the operating level by late 2024. Optimizations such as efficient model architectures ("Turbo," "Flash") and reserved cloud capacity contribute to healthy margins.

Burn rate and profitability: Despite significant growth investment, ElevenLabs has been reported as efficient and potentially profitable or breakeven by late 2024. The $180M Series C funding raised in January 2025 likely supports aggressive expansion rather than loss coverage. The company's moderate burn rate in 2024, possibly single-digit millions per month, is covered by ongoing revenue and new capital injections.

Customer base and usage metrics: ElevenLabs rapidly acquired users, reaching over 1 million registered users within five months of its 2023 launch. By early 2024, usage had expanded to millions of users generating extensive audio content, totaling approximately 1,000 years by January 2025. Notably, employees at over 60% of Fortune 500 companies have interacted with its platform, suggesting broad organic adoption. Major enterprise clients include HarperCollins, The Washington Post, Paradox Interactive, TIME Magazine, The New Yorker, The Atlantic, Bertelsmann, Publicis Groupe, Star Sports, Aston Martin’s F1 team, and NVIDIA.

Enterprise deals and ARPA: High-profile enterprise partnerships significantly contribute to ElevenLabs' revenue. Collaborations with companies such as Storytel, TheSoul Publishing, and Audacy, along with participation in the Disney Accelerator, suggest lucrative and scalable revenue streams. Average revenue per account (ARPA) varies widely: enterprises likely sign substantial six- or seven-figure annual contracts, which lifts average revenue per account well above self-serve subscription levels.

Geographic and segment mix: Initially popular among English-speaking online creators in North America and Europe, ElevenLabs expanded to support 32 languages and accents, targeting markets in Asia, Latin America, Poland, and India. Media, entertainment, gaming, publishing, and technology sectors remain significant revenue drivers, with emerging segments including education, healthcare, and customer service AI.

Notable usage milestones: ElevenLabs' platform experienced rapid adoption, generating 100+ years of audio by January 2024, increasing tenfold to 1,000 years within the following year. High engagement levels are reflected in localized audio content and conversational AI builder adoption, with significant integrations like Perplexity.ai and TIME Magazine further expanding its user base and usage volume.

Go-to-Market & Partnerships

From early on, ElevenLabs pursued a dual go-to-market (GTM) strategy: a self-service model targeting creators and developers globally, and a focused enterprise/business-development approach targeting key industries like media, gaming and publishing. This combination has enabled both bottom-up adoption (individual users bringing the tech into organizations) and top-down partnerships (official deals with companies). Below we outline the main GTM channels and notable partnerships:

Self-serve distribution (Web, API, SDKs): ElevenLabs’ initial GTM was product-led and viral. By launching a public web platform with a freemium tier, it allowed anyone curious about AI voices to try it immediately. This led to a groundswell of creators using it in projects and sharing results online, effectively marketing the product through user-generated content. For example, independent authors started using ElevenLabs to create audiobook narrations of their books, and YouTubers used it to voice characters, spreading awareness in creative communities. The web platform’s simplicity (just type text and choose a voice) and compelling output made it shareable. Social-media buzz (sometimes around both the positive use cases and the controversial ones) gave ElevenLabs global exposure without traditional ad spend. By the time the company introduced paid plans, it already had a million users on the platform thanks to this open-access strategy.

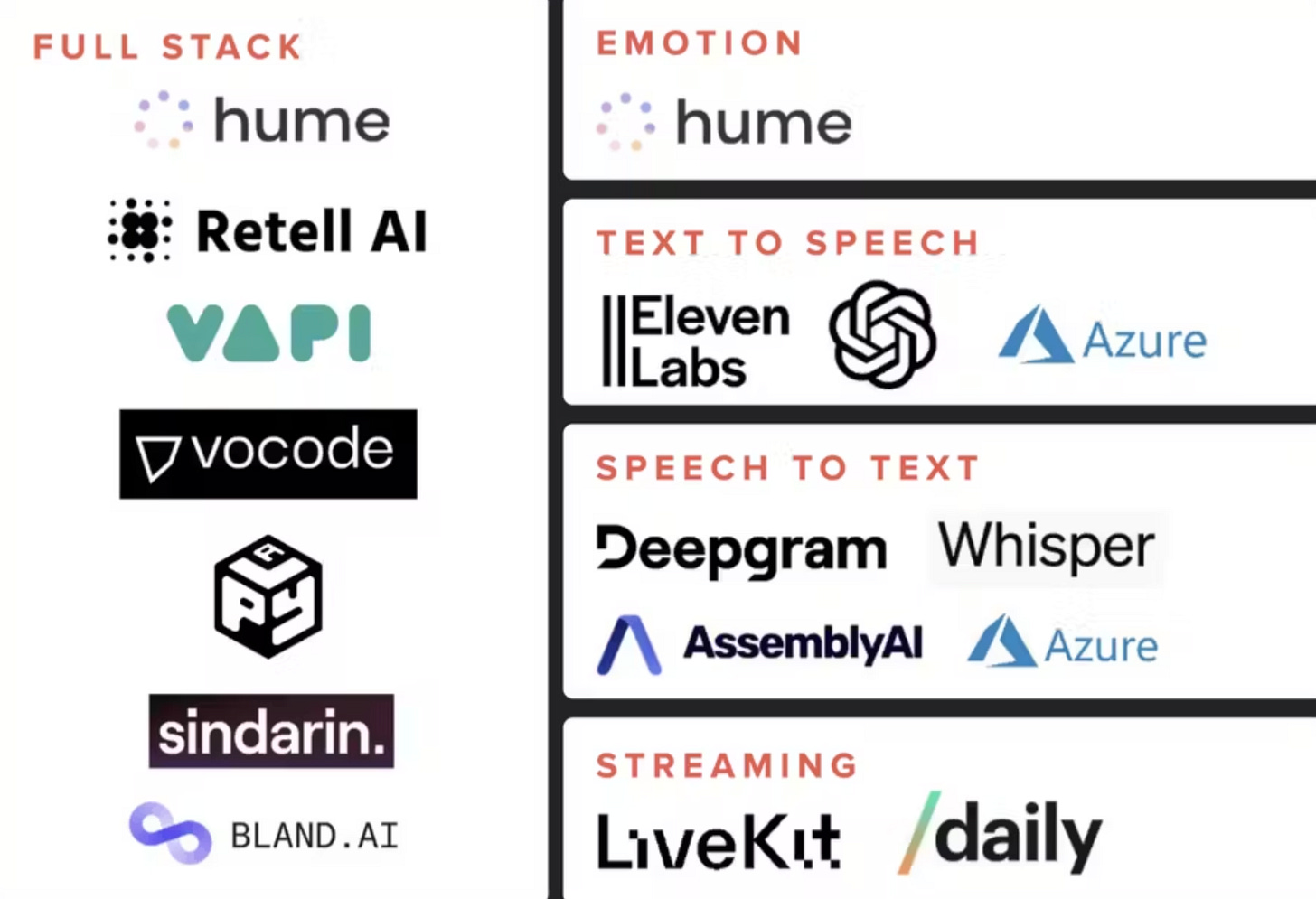

Simultaneously, ElevenLabs offered developer-friendly APIs and SDKs from an early stage. This tapped into the wave of AI integration; many developers building apps or services in 2023–24 wanted to add voice capabilities, and ElevenLabs provided a plug-and-play solution. The API allowed programmatic generation of speech, which was rapidly adopted in niches like: AI avatars (e.g., Synthesia integrating ElevenLabs for voice of video avatars), AI assistants (Perplexity adding spoken answers), chatbots (many chatbot projects let users choose ElevenLabs voices for outputs) and voice bots for games. The developer community even built unofficial wrappers and plugins (for example, a popular Unreal Engine plugin to use ElevenLabs voices in game development, and community projects on Reddit integrating ElevenLabs into custom AI assistants) – effectively spreading within tech circles. This bottom-up integration strategy meant that end-users might encounter ElevenLabs voices in various products without initially knowing it (white-labeled via API), which can then lead them to the platform itself if they investigate. According to TechCrunch, by January 2025 “dozens of major publishers and content creators across media and gaming, as well as a number of tech startups, were using ElevenLabs’ technology to power their voice features.” Many of those partnerships began with a developer just testing the API. To further cultivate this channel, ElevenLabs launched an Affiliate program and a Commercial Partner program, likely to incentivize agencies or dev shops to use its tech.

Target verticals and enterprise sales: On the enterprise front, ElevenLabs identified early that certain industries were particularly ripe for voice-AI transformation: publishing (audiobooks, news), entertainment (film dubbing, TV, animation), gaming (character voices) and localization (translation and dubbing). The company explicitly has solution pages for Publishing, Media & Entertainment, Conversational AI, etc., and it has tailored products like Dubbing Studio to fit those vertical workflows. The go-to-market approach has been to secure marquee partnerships in each vertical to validate the tech, and then expand.

In publishing, a landmark partnership was with Storytel (one of the largest audiobook services globally) signed around mid-2023. Through this, ElevenLabs became an engine for quickly generating audiobooks from text, helping Storytel and other publishers turn backlogs of text into spoken content at scale. They also partnered with digital-article-audio providers like Curio (which offers narrated articles). By late 2024, TIME, The Washington Post, The Atlantic, HarperCollins and The New Yorker were all working with ElevenLabs in some capacity to voice their content. For these customers, the value prop is both cost and speed – instead of hiring voice actors for every article or book, they can generate quality narration on demand.

In gaming, ElevenLabs pursued both Western and Asian markets. It signed Paradox Interactive (publisher of Cities: Skylines) in 2023, and Embark Studios as an investor/partner. These studios use the tech for prototyping game-character dialogue or even final in-game voice-overs for less-central roles, saving time and expense. In 2024, NetEase, a major Chinese gaming company, also became a partner, indicating expansion into China’s huge gaming market. ElevenLabs also collaborates with AI startup Inworld AI (which creates AI-driven game characters). By listing these gaming clients in press releases, ElevenLabs demonstrates to the industry that its solution is production-ready for games.

In media and localization, the partnership with TheSoul Publishing (a giant digital-content producer on YouTube/Facebook) showed that even non-traditional media embraced AI voice for multilingual content. Another key partnership in 2024 was with Audacy, one of the largest US radio-broadcasting groups. Audacy is using ElevenLabs to create synthetic voices for DJs and advertisements and to “augment programming.” Audacy’s EVP of Programming called the partnership “transformative … streamlining workflows and empowering creators.” ElevenLabs’ VP of Revenue said it is “bringing new voices to life, making radio more diverse and accessible.”

In the localization/dubbing space, ElevenLabs competes with startups like Papercup and Deepdub by offering its own dubbing-studio tool. The company reported “conducting tests with industry partners to enable AI dubbing at scale” in 2023. Through the Disney Accelerator, ElevenLabs worked with Disney’s localization teams on dubbing-quality pilots. Even without a formal Disney investment, that relationship is a valuable stamp of approval.

Channels and distribution partnerships: ElevenLabs also leverages channel partners. It launched an Impact Program that offers its API free to nonprofits, educational orgs and cultural institutions (80 partnerships by 2025). This seeds the tech in universities, libraries and NGOs, and supports accessibility projects (e.g., providing voices to 1,000+ individuals with speech impairments). Strategic investors like NTT Docomo Ventures (Japan) and Deutsche Telekom (Germany) point to telco integrations; ElevenLabs has said it is partnering with telcos for conversational-AI agents over phone lines.

Another distribution vector is cloud platforms and AI marketplaces. Google Cloud customers can apply committed-use credits to ElevenLabs usage, lowering adoption friction. Appearances in marketplaces like AWS Marketplace are likely as well. Participation in accelerators (Disney, HubSpot Ventures, etc.) further extends reach into those ecosystems.

Marketing and branding: Marketing is largely thought-leadership and community-driven. Cofounders Staniszewski and Dąbkowski give interviews (e.g., Sifted brunch, The Economist podcast), highlighting human-interest stories: helping a cancer patient speak again with her cloned voice, or letting students practice languages with native-accent AI partners. ElevenLabs also runs an active online community (Discord, etc.) where enthusiasts share voice samples and tips, generating new use cases organically.

Competitive Landscape

The AI voice synthesis market has grown crowded, with players ranging from tech giants to specialized startups. ElevenLabs sits in a competitive landscape where it must contend with both Big Tech (general AI platforms with voice features) and smaller companies focused on synthetic voice or dubbing.

Below we present a SWOT analysis of ElevenLabs relative to its top competitors, along with profiles of five key rivals.

Strengths (of ElevenLabs relative to others):

Best-in-class voice quality and versatility: ElevenLabs is widely regarded as having the most natural and expressive AI voices available commercially. Reviewers note its speech outputs capture emotions and human-like delivery better than competitors like Google Cloud’s TTS or Amazon Polly, which often still sound robotic. This quality edge comes from ElevenLabs’ singular focus on audio research, yielding proprietary models that outshine big-tech models where audio is secondary. It supports 32 languages and numerous accents with consistently high quality, while many rivals support fewer languages or see quality drop-off outside English. ElevenLabs’ voices also convey nuanced intonation and context (pausing at commas, raising tone for questions, etc.) exceptionally well—an advantage that attracts customers who need top-tier quality (e.g., audiobook publishers and high-end video production).

Fast-paced innovation and comprehensive product suite: ElevenLabs has rolled out new features (dubbing studio, voice design, sound effects, and more) in rapid succession. Its agility as a startup outmatches large competitors that update slowly. Crucially, it offers a one-stop platform—text-to-speech, voice conversion, voice cloning, and editing tools—all in one ecosystem. Many rivals provide only one of these services (for instance, some startups do cloning but not TTS, while big cloud providers do TTS but not easy cloning). The addition of a voice marketplace and direct consumer app broadens ElevenLabs’ reach beyond what most rivals have.

Strong community and brand recognition in AI voice: Through viral marketing and attentive response to user feedback, ElevenLabs has built a passionate user base. Its brand is now synonymous with cutting-edge voice AI, whereas many competitors (especially smaller ones) remain little-known. Prominent VC backing and “unicorn” status bolster its credibility when pursuing enterprise deals. The company’s public stance on ethics and safety—plus high-profile efforts to combat misuse (such as its AI speech-classifier tool)—help position it as a responsible leader in the field, an advantage when dealing with media companies or governments wary of deep-fake technology.

Weaknesses (challenges relative to competitors):

Limited proprietary data compared to Big Tech: ElevenLabs has amassed a large corpus of generated audio and user-provided samples, but it cannot match the vast speech datasets that companies like Google, Microsoft, or Amazon possess. Big Tech firms also enjoy access to unique data sources (e.g., YouTube transcripts or Cortana audio logs). ElevenLabs has had to rely partly on publicly available data (audiobooks, podcasts), raising potential legal questions about consent. If rivals leverage bigger datasets to improve accuracy or multilingual breadth, ElevenLabs could lose ground.

Higher cost structure and pricing: ElevenLabs’ quality edge comes at a computational cost, and its service is priced well above many TTS alternatives. For example, it can be roughly five times more expensive per minute than a basic competitor like Cartesia for raw TTS API calls. Price-sensitive customers with huge volume (e.g., call centers) may opt for cheaper—if slightly lower-quality—options. While ElevenLabs has introduced “Turbo” models to cut compute costs, margins could come under pressure as it scales.

Small organization vs. giants: ElevenLabs had about 120 employees as of early 2025, whereas competitors like Google or Microsoft devote entire divisions to speech technology. This disparity means ElevenLabs must carefully choose its battles and could be outpaced if a tech giant decides to focus intensely on surpassing ElevenLabs in voice naturalness. Some conservative enterprises may also prefer an established vendor such as Microsoft or Amazon due to perceived stability and easier integration.

Reliance on third-party platforms: A significant share of ElevenLabs’ usage comes through integrations into other apps and services. If major partners adopt an in-house solution or switch to a rival, ElevenLabs could lose a substantial volume of API calls. Likewise, platform owners (e.g., Apple or Google) could restrict third-party voice tech in favor of their own features, limiting ElevenLabs’ reach.

Opportunities:

Surging demand for voice across industries: Audiobook consumption is growing (about $5 billion in 2023, projected to $35 billion by 2030), and ElevenLabs is positioned to capture production work. Video localization, global streaming, and online content creators all need multilingual dubbing. In enterprise, call-center and customer-support markets—worth hundreds of billions globally—are ripe for conversational AI with natural voices. Healthcare (assistive tools, AI companions) and education (AI tutors, language learning) are also sizable markets starting to adopt synthetic voice. ElevenLabs already has pilots in these verticals, indicating ample growth headroom.
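To put the audiobook projection in perspective, the two market-size figures quoted above imply a compound annual growth rate. The following is a minimal sketch of that arithmetic; the function name `cagr` is illustrative, and the seven-year horizon assumes the figures refer to calendar years 2023 and 2030.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: about $5B in 2023, projected $35B by 2030.
# Assumption: both are calendar-year totals, giving a 7-year horizon.
audiobook_cagr = cagr(5.0, 35.0, 2030 - 2023)
print(f"Implied audiobook market CAGR: {audiobook_cagr:.1%}")
```

Under these assumptions the projection works out to roughly 32% growth per year, sustained for seven years.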

Geographic expansion: Much traction to date has been in English and a few major languages. There is enormous opportunity in under-served languages and dialects—especially in Asia (Chinese, Japanese, Hindi, etc.), the Middle East, and Africa. ElevenLabs’ Series C plan emphasizes covering “all languages, accents, and dialects” and expanding teams in Asia, LATAM, and EMEA. Being first to deliver high-quality voice cloning in languages such as Hindi or Arabic could unlock large new user bases and government or broadcaster contracts.

Integration with complementary AI systems: ElevenLabs can become the default voice layer for any AI system that needs to talk—pairing with large language models for conversational agents, powering AR/VR avatars, smart-home devices, or personal AI assistants. Investors call voice “our most natural interface,” and ElevenLabs is building foundational tech for that ecosystem. Personalized AI companions, dynamic audio ads, and voice-enabled IoT devices all represent growth avenues.

Enterprise SaaS upsell: Beyond raw voice generation, ElevenLabs can move up-market with turnkey solutions—e.g., an AI audio CMS for publishers or an AI voiceover studio for animation firms. Packaging its tech into vertical SaaS products captures more value and stickier subscription revenue. Its Studio editor for long-form audio is one example, and more specialized offerings (e.g., “AI DJ/host” for radio, “call center in a box” with partners’ STT and dialog systems) could command six- or seven-figure annual contracts.

Partnership with or acquisition by Big Tech: A deep partnership with a tech giant, or an eventual acquisition by one, remains a lucrative path. All major firms (Amazon, Apple, Google, Microsoft, Meta) prize voice technology; if ElevenLabs maintains its lead, one of them could invest in or acquire the company to accelerate its own roadmap.

Threats:

Competition from Big Tech entrants: Should OpenAI, Google, Amazon, Microsoft, or Meta release equally capable voice products, ElevenLabs’ edge could erode quickly. OpenAI, for instance, could deploy voice generation to its massive ChatGPT user base. Tech giants possess greater resources, integrated ecosystems, and existing enterprise relationships, letting them catch up or undercut on price.

Emergence of open-source models and commoditization: Free, open-source voice-cloning projects (e.g., Coqui TTS, VITS) are improving. If they reach near-parity quality, developers might opt for them to avoid costs or restrictions. ElevenLabs must continue to innovate and offer superior ease-of-use, support, and proprietary voices to keep its moat.

Regulatory and legal challenges: Synthetic-media regulation is tightening. ElevenLabs’ tech has been implicated in deep-fake scandals, prompting lawmakers to consider strict disclosure and liability rules. Pending EU AI Act provisions and U.S. state laws could impose onerous compliance requirements or usage limits, potentially hampering convenience and adoption. Lawsuits from voice actors and authors over training data could force model retraining or damages. A major misuse incident could trigger bans or severe restrictions.

Talent and hiring competition: ElevenLabs competes with Google DeepMind, Meta, OpenAI, and other well-funded startups for specialized audio AI talent. Losing key researchers or failing to hire enough new experts could slow innovation. Rapid head-count growth also risks diluting culture and focus.

Reliance on partners and platforms: Heavy dependence on integrations exposes ElevenLabs to partner churn and platform policy shifts. A partner switching providers, or a platform owner favoring its own voice tech, could reduce usage or cut off distribution, affecting revenue.

Regulatory, Ethical, and IP Risks

The rise of ultra-realistic voice cloning technology has triggered a host of ethical and legal concerns. ElevenLabs, at the forefront of this technology, faces significant risks in this domain. The company has encountered real-world misuse of its platform and operates in an environment of increasing regulatory scrutiny of generative AI. Key issues include voice-cloning misuse and deepfakes, emerging regulations, intellectual property (IP) concerns, and litigation or enforcement actions to date, along with ElevenLabs' responses.

Voice-cloning misuse & deepfake incidents: Shortly after ElevenLabs’ public debut, malicious actors abused the technology. Early incidents included hateful and harassing audio clips that mimicked celebrities such as Emma Watson and Joe Rogan and contained threats and racist remarks. Additionally, a cloned voice successfully fooled a bank’s voice-authentication system, raising fraud concerns. In early 2024, a deepfake robocall imitating U.S. President Joe Biden discouraged voters from participating in a primary; it was widely suspected to have been created with ElevenLabs’ technology, though the company never explicitly confirmed this. Similar audio disinformation appeared during the 2024 election cycles in Europe and the U.S., and a Russian propaganda operation used ElevenLabs’ product to produce fake news audio.

These incidents underscore the weaponization potential for deception, political disinformation, harassment, and fraud, negatively impacting ElevenLabs’ brand and creating distrust among the public and policymakers.

Regulatory environment and deepfake laws: In response to concerns:

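The European Union’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, imposes transparency requirements on synthetic audio, including disclosure of deepfakes and machine-readable marking of AI-generated content; depending on how a given use is classified, voice-cloning providers may also face additional safeguards or certification requirements.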

In the U.S., states like California and Texas prohibit specific deepfake uses, particularly in elections and pornography. The FTC has warned that AI companies may face penalties if their products negligently enable scams or fraud.

China mandates labeling AI-generated media and obtaining consent for using someone’s likeness, exemplifying stringent controls potentially influencing global regulations.

Law enforcement agencies expressed concerns about voice cloning's criminal misuse, possibly prompting regulation to restrict features or mandate sharing information with authorities.

ElevenLabs advocates for regulation penalizing illicit generative voice use and maintains that governments must ensure fairness by regulating bad actors globally.

Company’s safety measures (watermarking, verification, restrictions): ElevenLabs addresses these risks through multiple strategies:

Released an AI Speech Classifier that identifies audio generated by its technology, embedded hidden digital signatures in its output, and aligned with C2PA standards for provenance metadata.

Introduced user verification for high-risk voice cloning: custom voices are limited to verified paid users, and a voice captcha serves as an authenticity check.

Created a “do not mimic” list banning cloning of certain prominent political figures and celebrities to prevent sensitive deepfakes.

Implemented content moderation, monitoring user-generated audio through automated and manual processes, banning violators, and logging generated files for potential audits.

Engaged in collaborative research and partnerships to develop better detection and prevention tools for deepfakes.

Despite these measures, ElevenLabs acknowledges ongoing challenges due to the broader availability of open-source or foreign models enabling misuse.

Intellectual Property (IP) and rights issues: Voice actors and performers have expressed concerns about AI replicating their performances without consent or compensation. Audiobook narrators and voice actors initiated legal actions against ElevenLabs, alleging unauthorized use of their recordings to train AI models, potentially violating copyright and publicity rights. Legal clarity regarding training AI models on copyrighted data remains unsettled, posing litigation risks and potentially increasing operational costs.

Publicity rights are also significant, with actors' unions negotiating consent and compensation for AI-generated voice use. ElevenLabs’ initial compensation approach (platform credits) faced criticism, prompting possible shifts toward monetary royalties to satisfy legal and competitive pressures.

Litigation and enforcement to date:

Voice actors initiated legal actions against ElevenLabs for unauthorized voice cloning.

No regulatory fines or orders have emerged so far, but U.S. authorities are closely monitoring potential election interference.

Ethical stance: ElevenLabs prioritizes safety and ethical usage, slowing feature rollouts to ensure robust moderation and engaging the creative community to develop acceptable frameworks. Despite this, skepticism from creators persists, pressuring the company toward monetary compensation models.

Strategic Roadmap & Hiring Plans

ElevenLabs is at a critical juncture where it must translate its rapid early success into long-term dominance. The company has publicly outlined an ambitious product roadmap and is scaling up hiring to execute on its vision. This section details known elements of ElevenLabs’ future plans, including new product features, target capabilities, and how the team and hiring will support these goals.

Product roadmap and upcoming features:

ElevenLabs’ near-term roadmap focuses on making its AI voices even more expressive, expanding language and modality support, and enabling more interactive, real-time use cases. Several specific developments have been mentioned:

More expressive and controllable voices:

ElevenLabs plans to invest heavily in research for more expressive and controllable voice AI. This means enabling finer emotional tuning, dynamic pacing, and context-aware delivery. For example, the system might automatically adjust tone based on punctuation or intended sentiment, or allow users to specify the emotion (“sad” vs. “excited”) for each sentence. ElevenLabs already offers intonation and style settings, but the aim is to push closer to human-level performance where the AI voice can laugh, whisper, emphasize certain words, or convey subtle sarcasm appropriately. Achieving this may involve integrating their voice models with large language models for better context understanding — building “omni-models” that combine text and audio for multimodal interactions. A concrete example might be an AI narrator that can read dialogue with different character voices or emotions automatically by understanding narrative context.

Multilingual and real-time translation/dubbing:

ElevenLabs’ core ambition remains real-time universal translation: speaking in one language and hearing your own voice in another language almost instantly. The company has laid the groundwork with an AI Dubbing tool covering dozens of languages, with plans to expand to all languages, accents, and dialects. It has also expanded into Indic languages by hiring a team in India, so support for languages like Hindi, Bengali, and Tamil will likely improve. A key challenge is moving dubbing from a batch process to a near-real-time one, enabling live translation of meetings or streams in which one person’s voice comes out in multiple languages concurrently. This ties into strategic partnerships with telecom giants, which hint at features for voice translation on phone calls or live broadcasts: imagine calling someone and having your voice translated into their language, in your own timbre, nearly live. The vision also includes making AI voices adaptive in real-time conversation, not just one-way generation, by understanding when to speak, how to pause, and how to adapt to live dialogue.

Conversational AI agents & speech-to-text integration:

In 2024, ElevenLabs launched a Conversational AI tool to help developers build voice-based interactive agents. Going forward, they plan to advance this significantly. They are working on better speech recognition so the AI can listen as well as it speaks. One roadmap item is improving speech-to-text for many languages to feed into these agents. We should expect ElevenLabs to offer a more complete voice dialogue system — essentially an SDK where an AI can listen, process with an LLM or other logic, and respond in a lifelike voice. The company aims to create new use cases for audio — customer support, education, companionship AI, and more. As a proof-of-concept, they partnered with TIME magazine to create a conversational voice bot that lets users ask questions about Person of the Year content. Future steps might include news sites where users can ask an AI voice questions about articles, or interactive fiction where you talk to characters. They also plan to deepen interactivity by enabling fluid responses and features like interruptible speech — solving challenges like AI voice bots reacting to human interruptions or emotional cues mid-conversation.
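The listen, process, respond loop described above can be sketched in a few lines. This is a hypothetical illustration only, not ElevenLabs’ SDK: the `VoiceAgent` class and the three stand-in stages (`fake_stt`, `fake_llm`, `fake_tts`) are invented names, and a real system would swap in actual speech-to-text, LLM, and text-to-speech services and handle streaming and interruptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class VoiceAgent:
    """Hypothetical three-stage voice agent: listen -> reason -> speak."""
    transcribe: Callable[[bytes], str]    # speech-to-text stage
    respond: Callable[[List[str]], str]   # LLM or other dialogue logic
    synthesize: Callable[[str], bytes]    # text-to-speech stage
    history: List[str] = field(default_factory=list)

    def handle_turn(self, audio_in: bytes) -> bytes:
        """Process one user utterance and return the agent's spoken reply."""
        user_text = self.transcribe(audio_in)
        self.history.append(f"user: {user_text}")
        reply_text = self.respond(self.history)
        self.history.append(f"agent: {reply_text}")
        return self.synthesize(reply_text)


# Stand-in stages so the sketch runs without any external service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")          # pretend the bytes are a transcript


def fake_llm(history: List[str]) -> str:
    last_user_text = history[-1].removeprefix("user: ")
    return f"You said: {last_user_text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")           # pretend the bytes are audio


agent = VoiceAgent(fake_stt, fake_llm, fake_tts)
reply_audio = agent.handle_turn(b"hello")
print(reply_audio.decode("utf-8"))
```

Keeping the three stages as interchangeable callables mirrors the modular design the paragraph implies: the speech-recognition, reasoning, and voice components can each be upgraded or replaced independently.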

Mobile and consumer expansion:

The ElevenReader mobile app is likely just the start of consumer-facing products. Future additions might include choosing multiple voices to read a script (turning any text into a multi-voice podcast) or integration with news services. There may be a desktop or browser extension that reads web pages in a chosen voice. ElevenLabs has also teased features that would allow users to publish audiobooks on its platform with multiple voices and better localization — possibly creating a user-generated audiobook marketplace, similar to a YouTube for audiobooks but with AI narration. This points to a strategy of hosting content, which could open new revenue streams and drive usage. If they attract authors to produce audiobooks with AI and distribute them directly, ElevenLabs will move from providing tools to being a distribution platform as well.

Audio FX and beyond voice:

With the launch of a Sound Effects Model (text to sound effects), ElevenLabs signaled expansion beyond voice to general audio. The roadmap could involve adding background music or ambient sound generation to accompany voice content — for example, an audiobook with AI-generated background noises or music. They already offer a Voice Isolator to remove background noise from audio and may integrate more audio editing capabilities, like changing the acoustic environment of a voice to sound like it’s echoing in a hall. The goal is to build a comprehensive “Audio AI platform” that covers all audio content creation, not just speech. Future features could include mixing voices with sound effects, tools for adjusting intonation after generation, or adding emotional filters (e.g., “make this voice 20% more angry”).

Enterprise features:

To attract more enterprise usage, ElevenLabs will likely implement features like on-premise deployment or private model instances for large clients who do not want to send data to a multi-tenant cloud. They may offer fine-tuning or training of voices on proprietary data, such as a company’s call logs to create a unique customer service persona. They might also develop more analytics around audio — for example, providing insights on listener engagement for content voiced by AI, which could appeal to media partners.

Hiring and team growth:

ElevenLabs has ramped up hiring significantly and will continue doing so to achieve its roadmap.

Headcount trajectory:

The company grew from 8 people in January 2023 to about 40 by January 2024 and to around 70 by mid-2024. By the Series C close in January 2025, it had about 120 employees across its London, New York, and Warsaw offices. With fresh funding, it is likely to continue hiring aggressively in 2025, aiming to roughly double headcount to 200–250. It has opened a new R&D center in Warsaw and an India office for Indic-language and engineering talent. It has also hinted at expanding further in Asia and potentially opening a Bay Area office to tap Silicon Valley talent.

Key hiring areas:

ElevenLabs is hiring in AI research, engineering (machine learning and audio engineering), product management, and go-to-market roles (sales and partnerships). On the research side, they are expanding their small team of speech scientists and researchers to work on multilingual capabilities, expressiveness, and core model improvements. They also need infrastructure and software engineers to run a platform that handles heavy GPU usage at scale. On the product side, they plan to add product managers and designers to develop user-friendly interfaces for the Studio, mobile app, and other applications. For go-to-market, they are building out enterprise sales, account management, developer relations, and customer success. Ethics, policy, and trust & safety hires are also likely as the regulatory environment for generative AI evolves.

Staniszewski has noted that the team is still too small to tackle everything on the roadmap, which justifies the rapid hiring plan. They aim to maintain high quality of hires and a strong culture while scaling to several hundred employees.

Organizational development:

ElevenLabs has three main offices: London (business HQ), New York (partnerships and US clients), and Warsaw (R&D). The founders split duties, with Mati as CEO focusing on strategy and go-to-market and Piotr as CTO leading research. They have brought in experienced executives for revenue and sales, and more VP or C-level roles are likely to follow, including a possible Head of Engineering, a Head of Product, and additional board members.

Culture and talent attraction:

As a well-funded unicorn in the generative AI space, ElevenLabs has momentum for recruiting top talent. Their presence in London and Warsaw taps into strong European engineering pools, while a planned San Francisco presence would help attract US West Coast talent. TIME’s recognition of their CTO among top AI innovators also boosts their reputation among researchers.

Focus areas for R&D hiring:

Priority areas likely include multilingual and low-resource language experts, specialists in speech emotion modeling, signal processing engineers for better audio quality and lower latency, and experts in telephony audio to support telecom partnerships.

Outlook

ElevenLabs is seen as a clear leader in AI voice, with analysts bullish that it could become the Adobe of audio if it sustains explosive revenue growth and locks in big partnerships by 2027. The optimistic case foresees it hitting $300–500M ARR, dominating audiobooks, dubbing, and powering AI assistants, while high margins and a sticky platform could make it hugely profitable. Its head start, brand, and potential integration with Big Tech could protect it from commoditization and amplify its network effects. However, risks loom: stricter regulation, misuse scandals, or backlash from voice actors could slow adoption and raise compliance costs. Big rivals like OpenAI, Google, or AWS could release cheap or bundled alternatives, forcing ElevenLabs to defend its premium position. Lawsuits over training data and talent poaching could also threaten its edge if not managed well. The next two years will reveal if ElevenLabs cements itself as the backbone of AI voice or gets squeezed by regulation and powerful competitors — so revenue growth, major deals, and new product quality will be crucial signals to watch.
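As a rough check on the optimistic case, the sketch below computes the annual growth needed to move from the roughly $90 million ARR reported for late 2024 (the figure cited in this report's summary) to $300–500 million. The three-year horizon (late 2024 to late 2027) is an assumption, as are the helper names.

```python
from typing import List


def required_annual_growth(start: float, target: float, years: int) -> float:
    """Constant yearly growth rate needed to go from `start` to `target`."""
    return (target / start) ** (1 / years) - 1


def arr_path(start: float, rate: float, years: int) -> List[float]:
    """Year-by-year ARR when growing at a constant `rate`."""
    return [start * (1 + rate) ** year for year in range(years + 1)]


START_ARR_M = 90.0   # $M, per the report: ~$90M ARR by late 2024
YEARS = 3            # assumption: late 2024 -> late 2027

for target_m in (300.0, 500.0):
    rate = required_annual_growth(START_ARR_M, target_m, YEARS)
    path = ", ".join(f"${v:.0f}M" for v in arr_path(START_ARR_M, rate, YEARS))
    print(f"Target ${target_m:.0f}M needs {rate:.0%} per year: {path}")
```

Under these assumptions the optimistic range requires roughly 49% to 77% annual growth, well below the ~260% year-over-year rate cited for 2024, although growth rates typically slow as the revenue base gets larger.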

Bibliography

Disclaimer

The content of Catalaize is provided for informational and educational purposes only and should not be considered investment advice. While we occasionally discuss companies operating in the AI sector, nothing in this newsletter constitutes a recommendation to buy, sell, or hold any security. All investment decisions are your sole responsibility—always carry out your own research or consult a licensed professional.