Inside OpenAI’s New Model (GPT-5)

OpenAI launched GPT-5 on August 7, 2025, and switched it on the same day in ChatGPT and the API. It is a unified multimodal system that understands text and images, takes short video and audio, and returns high-fidelity text and structured JSON. Microsoft rolled it out across Bing, Windows, Office 365 Copilot, and Azure AI, putting it in front of enterprises and consumers immediately.

Unlike GPT-4’s single-model approach, GPT-5 is architected as a composite of specialized models coordinated by a router. In ChatGPT usage, a fast, efficient sub-model handles most queries, while a deeper “GPT-5 thinking” model is invoked for complex tasks, guided by a real-time router that analyzes the query’s difficulty and tool requirements. This means GPT-5 can respond quickly to simple prompts but will “think longer” (with chain-of-thought steps or code execution) when a user asks for intricate reasoning. The router continuously learns from user preferences and feedback, improving when to deploy the heavy reasoning mode. Note: OpenAI indicates that these multi-model capabilities may be integrated into a single model in the future, but currently GPT-5 relies on this system-of-models design. For API users, OpenAI exposes the reasoning model directly as gpt-5 (with options to turn down reasoning for speed), whereas the ChatGPT UI uses the router to mix in a non-reasoning model (gpt-5-chat) for faster replies.
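The routing idea can be illustrated with a toy dispatcher. This is only a sketch: the production router is described as a learned system, whereas the keyword lists, the length threshold, and the `route` function below are hypothetical stand-ins. The model names `gpt-5` and `gpt-5-chat` are the ones mentioned above.

```python
from dataclasses import dataclass

# Hypothetical cue lists. The real router is learned from user feedback,
# not driven by keyword rules like these.
REASONING_CUES = ("think hard", "prove", "step by step", "debug")
TOOL_CUES = ("search the web", "run this code", "look up")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route(prompt: str, long_prompt_chars: int = 2000) -> RoutingDecision:
    """Pick the fast chat model or the reasoning model for a prompt."""
    text = prompt.lower()
    if any(cue in text for cue in REASONING_CUES):
        return RoutingDecision("gpt-5", "explicit reasoning cue")
    if any(cue in text for cue in TOOL_CUES):
        return RoutingDecision("gpt-5", "tool use requested")
    if len(prompt) > long_prompt_chars:
        return RoutingDecision("gpt-5", "long prompt")
    return RoutingDecision("gpt-5-chat", "simple query")


if __name__ == "__main__":
    for p in ("What is the capital of France?", "Think hard about this proof."):
        print(p, "->", route(p))
```

A learned router would replace the `if` chain with a classifier trained on preference signals, but the interface (prompt in, model choice out) would look much the same.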


Key Improvements vs GPT-4.x

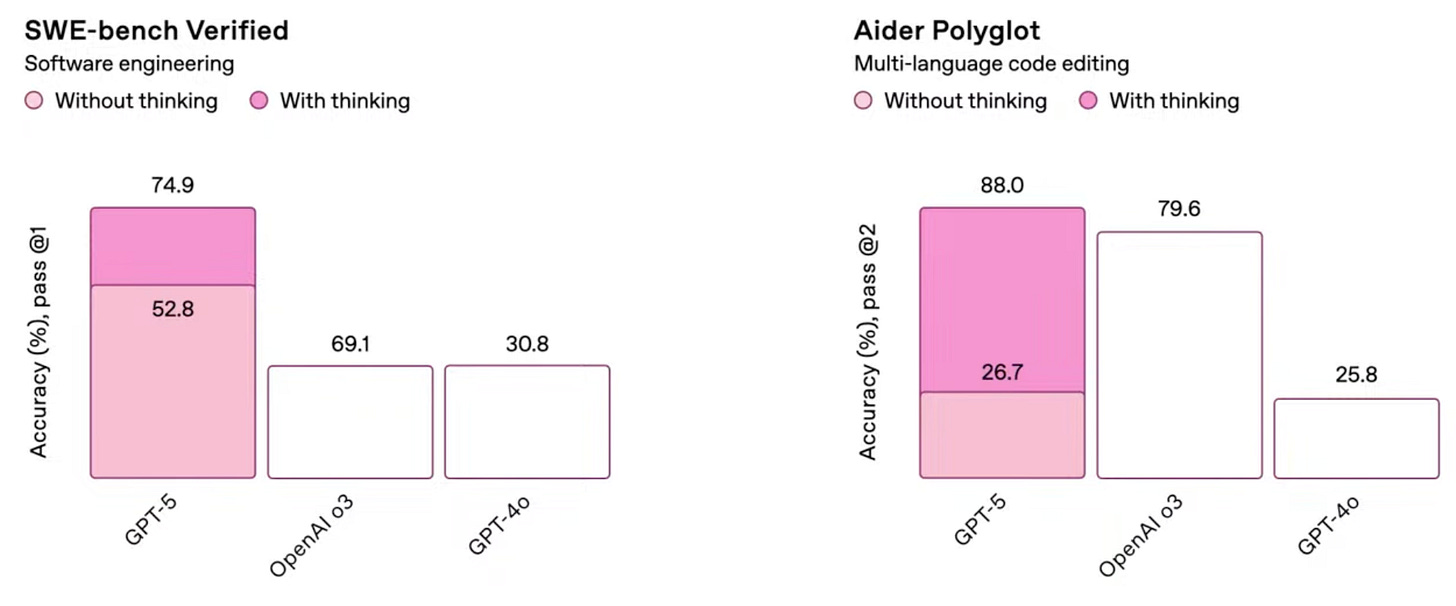

Reasoning & “Test-Time Compute”: GPT-5’s hallmark change is its ability to apply significantly more computation per query when needed. This so-called test-time compute approach allows GPT-5 to achieve new levels of reasoning on difficult problems without retraining a larger base model. In practice, GPT-5 can internally generate and evaluate chains-of-thought (step-by-step reasoning) or even spawn tool-using agents before finalizing an answer. GPT-4 introduced early forms of chain-of-thought prompting, but GPT-5 automates it: if you say “think hard about this”, the system will engage the slower, expert mode. Benchmarks show GPT-5 solving graduate-level math and science problems that stumped GPT-4.1. For example, on the Harvard-MIT Math Tournament (HMMT 2025), GPT-5 scored 93.3% with no external tools, whereas GPT-4.1 managed only 28.9% – a dramatic improvement attributable to GPT-5’s deeper reasoning strategy.

Multimodal Integration: While GPT-4 (Vision) was limited to image understanding in a constrained release, GPT-5 excels across visual, spatial, and even video-based reasoning tasks. It can interpret charts, diagrams, photos, and short video sequences. In evaluations, GPT-5 outperforms GPT-4.1 on multimodal benchmarks like MMMU, scoring ~84% vs ~75%. It can accurately describe or answer questions about images and combine visual context with text queries. For instance, GPT-5 can summarize a photo of a presentation slide or answer questions about a schematic diagram with higher accuracy than before.

Long Context and Memory: GPT-5 massively extends the context window relative to GPT-4’s 8k/32k token limits. The model supports up to ~400,000 tokens of context (about 272k tokens of input plus up to 128k tokens of reasoning and output). This roughly 50x increase over GPT-4’s 8k window means GPT-5 can ingest hundreds of pages of text or multiple lengthy documents and still reason over them. In a new benchmark called BrowseComp Long Context, GPT-5 answered 89% of questions correctly when given 128k–256k token inputs of real web text. This enables book-length analysis and better continuity across conversations.
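Taking the ~400,000-token total and the 128k output allowance quoted above at face value, the input share works out to 272,000 tokens. The sketch below turns that arithmetic into a pre-flight check. The four-characters-per-token ratio is a rough rule of thumb assumed here, not a tokenizer, and the function names are illustrative.

```python
import math

CONTEXT_WINDOW = 400_000  # total tokens, figure quoted in the text
MAX_OUTPUT = 128_000      # reasoning + output tokens, figure quoted in the text
MAX_INPUT = CONTEXT_WINDOW - MAX_OUTPUT  # 272,000 tokens left for input


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; a real tokenizer will differ."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str) -> bool:
    """True if the estimated token count fits in the input budget."""
    return estimate_tokens(text) <= MAX_INPUT


if __name__ == "__main__":
    document = "a" * 1_000_000  # about 250k estimated tokens
    print(MAX_INPUT, estimate_tokens(document), fits_in_context(document))
```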

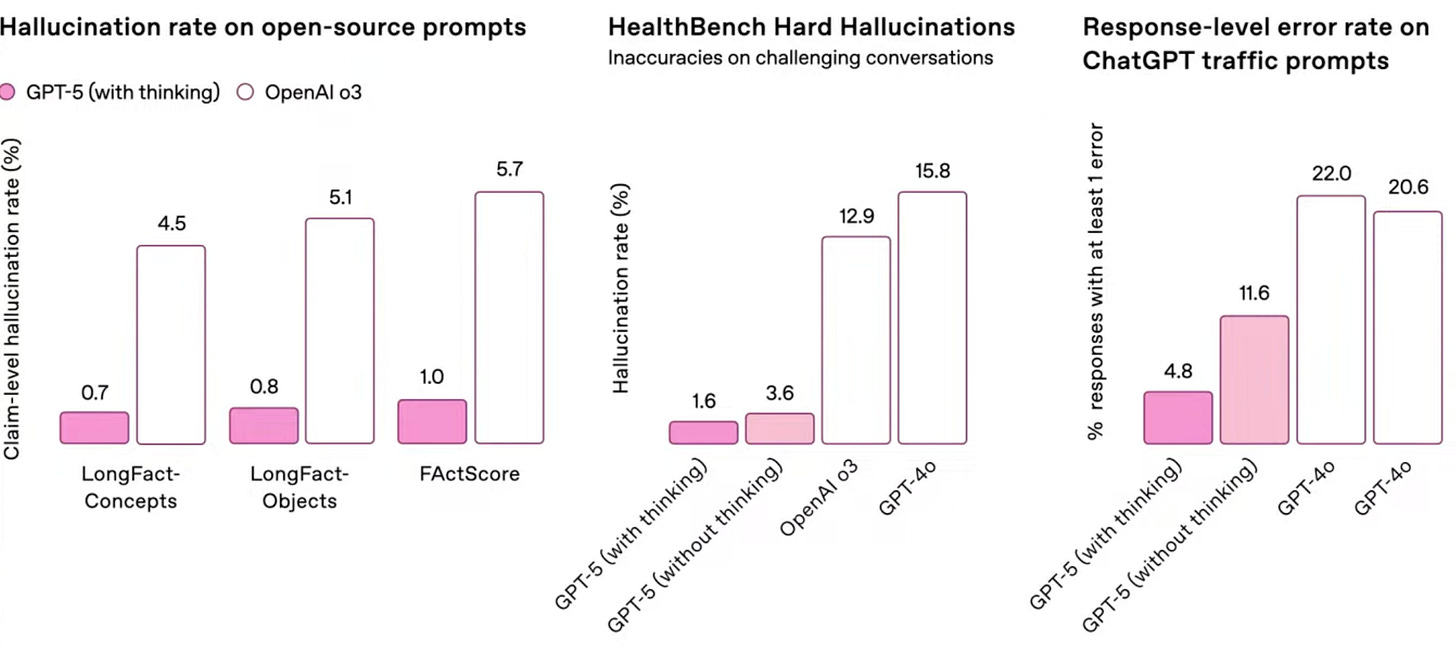

Accuracy and Factuality: OpenAI reports major reductions in hallucination and misstatements. With web search enabled, GPT-5’s responses were ~45% less likely to contain a factual error than GPT-4o’s, and in reasoning mode ~80% less likely than those of OpenAI’s earlier o3 model. In stress tests where the images a prompt referred to had been removed, GPT-5 admitted uncertainty far more often than prior models instead of fabricating answers.

Customized Output & Style: GPT-5 introduced new controllability features. Developers can set a `verbosity` parameter to prefer concise or detailed answers, and adjust `reasoning_effort` to control how much “thinking” the model does. The model is more professional in tone by default, communicates uncertainty, and adapts to the user’s expertise level.
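As a rough illustration of how these two knobs might be set, the sketch below builds a request payload without sending it. The parameter names `verbosity` and `reasoning_effort` come from the text; the allowed values, the chat-style payload shape, and the `build_request` helper are assumptions, so check the current API reference before relying on them.

```python
# Allowed values are assumptions for this sketch, not taken from the article.
VERBOSITY_LEVELS = {"low", "medium", "high"}
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}


def build_request(
    prompt: str,
    verbosity: str = "medium",
    reasoning_effort: str = "medium",
    model: str = "gpt-5",
) -> dict:
    """Build a chat-style request body with the two control parameters."""
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    if reasoning_effort not in REASONING_EFFORTS:
        raise ValueError(f"unknown reasoning_effort: {reasoning_effort!r}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "verbosity": verbosity,
        "reasoning_effort": reasoning_effort,
    }


if __name__ == "__main__":
    # A quick lookup: terse answer, little deliberation.
    print(build_request("Define idempotency.", "low", "minimal"))
```

Pairing low verbosity with minimal effort suits quick lookups; raising both is the natural choice for design reviews or proofs, at the cost of latency and output tokens.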

System Features: Tools, Agents, Memory, Context Limits, Multimodality, Safety

Modern LLMs are more than static text predictors – they function as platforms with tools, extended memory, and configurable behaviors. We examine how GPT-5 and competitors stack up on these system-level features.

Function Calling and Tool Use: GPT-5 has first-class support for function calling, which was introduced in GPT-4 but is now more robust. It can invoke tools and APIs using structured outputs (JSON) reliably, or even with free-form text if needed. OpenAI added a new custom tools capability: developers can register tools that accept natural language (rather than JSON), and GPT-5 will call them with plaintext commands, validated by a provided grammar. This is useful for, say, executing a shell command or interacting with a legacy system that expects a specific format. GPT-5 also supports calling multiple tools in parallel, which means it can, for example, perform a database query and a web search concurrently to speed up response times. Built-in tools include web browsing, code execution, file lookup, and image generation, all of which GPT-5 can use autonomously if enabled. The result is an agentic workflow: GPT-5 can decide mid-answer to use Python for calculation, or fetch an image, etc., without user micromanagement.
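The parallel tool-calling pattern described above can be sketched on the client side with `asyncio`. Everything here is illustrative: the two tools are stubs, and the `{"name", "arguments"}` call format is an assumption modeled on JSON function calling rather than a guaranteed wire format.

```python
import asyncio
import json


async def query_database(sql: str) -> dict:
    """Stub tool: pretend to run a query."""
    await asyncio.sleep(0.01)
    return {"rows": 3, "sql": sql}


async def web_search(query: str) -> dict:
    """Stub tool: pretend to search the web."""
    await asyncio.sleep(0.01)
    return {"hits": [f"result for {query}"]}


# Registry mapping tool names to implementations (hypothetical names).
TOOLS = {"query_database": query_database, "web_search": web_search}


async def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute all requested tool calls concurrently, preserving order."""

    async def run_one(call: dict) -> dict:
        fn = TOOLS[call["name"]]
        args = json.loads(call["arguments"])  # arguments arrive as a JSON string
        return {"name": call["name"], "output": await fn(**args)}

    return await asyncio.gather(*(run_one(c) for c in tool_calls))


EXAMPLE_CALLS = [
    {"name": "query_database", "arguments": '{"sql": "SELECT 1"}'},
    {"name": "web_search", "arguments": '{"query": "GPT-5 pricing"}'},
]

if __name__ == "__main__":
    print(asyncio.run(run_tool_calls(EXAMPLE_CALLS)))
```

Because both stubs sleep concurrently, the batch takes roughly as long as the slowest tool rather than the sum of both, which is the latency benefit the text refers to.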

Competing models have made similar strides:

Anthropic Claude 4: Introduced extended thinking with tool use, allowing the model to alternate between chain-of-thought and external tool calls for better answers. Claude can use a web search tool (via API) and developers report it can also work with documents provided via a Files API. Notably, Claude 4 allows parallel tool use as well – it can, for instance, search multiple queries simultaneously. Its reliability in tool use is high; Anthropic claims significantly reduced “tool misuse” behaviors (65% less likely to take a wrong shortcut vs previous model). Claude’s agent can even write to a memory file to store info for later use during a session.

Google Gemini: Gemini 2.5 (Pro) is described as a “thinking model” that inherently uses chain-of-thought and can presumably orchestrate tool use. Google’s ecosystem emphasizes integration with Google’s own tools: for coding, Gemini can use the Codey execution environment and even produce working apps via an internal agent (as shown in their demo of creating a video game from a one-line prompt). While Google hasn’t provided a generic function calling API like OpenAI’s JSON interface, they offer MakerSuite and Vertex AI Tools where developers can connect functions. Gemini’s strong suit is likely combining modalities – e.g. reading an image and then calling a relevant API (like Google Lens or Maps). We do know Gemini 2.5 can “draw logical conclusions and incorporate context” in a way that implies internal tool usage when needed. Google’s recent Deep Think experiments and the Gemini CLI for GitHub Actions show that Google expects developers to use Gemini in agentic workflows (e.g. automating DevOps tasks with it).

xAI Grok: With Grok 4 Heavy, xAI took a unique approach: multi-agent collaboration. Grok Heavy will spawn multiple “agent” instances of itself to tackle a problem in parallel and then aggregate their results (“study group” approach). In effect, it’s using tool use but the tools are copies of itself. This boosted its performance on hard tasks like HLE when tools are not directly available. However, xAI has also announced actual tools: they plan to add a coding-specific model (August 2025) and a multimodal agent (Sept 2025), suggesting their platform will also support specialized tool use. As of launch, Grok’s integration with X (Twitter) could be considered a built-in tool – it has real-time access to social media data and the web through X platform integration. The downside has been looser constraints – Grok famously had a “politically incorrect” system prompt that led it to produce disallowed content when using that live data, something OpenAI and others avoid via strict filters.
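The “study group” idea can be approximated with a simple majority vote over several independent attempts. xAI has not published how Grok Heavy aggregates its agents, so the voting rule below is a stand-in, and the agents are stub functions rather than model calls.

```python
from collections import Counter
from typing import Callable


def study_group(question: str, agents: list[Callable[[str], str]]) -> tuple[str, float]:
    """Ask every agent, then return the most common answer and its vote share."""
    answers = [agent(question).strip().lower() for agent in agents]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


if __name__ == "__main__":
    # Three stub "agents"; in practice each would be a separate model run.
    agents = [lambda q: "42", lambda q: "42 ", lambda q: "41"]
    print(study_group("What is 6 * 7?", agents))
```

Note the cost implication: with N agents, each question consumes roughly N times the compute of a single run, whatever the aggregation rule.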

Meta Llama 3: As an open model, Llama doesn’t have a single official tool API, but the community has built numerous wrappers (LangChain, LlamaIndex) to allow Llama-based agents to use tools. Meta’s focus is slightly different: they integrate retrieval and search results directly. The updated Meta AI assistant using Llama 3 includes real-time Bing search in its pipeline. So when asked a factual question, the assistant behind the scenes fetches web results and feeds them into Llama 3’s context. This mimics tool use (search tool) but is not exposed as function-calling JSON. Still, for enterprise developers, hooking Llama 3 to tools is entirely feasible and many open implementations exist. One advantage: because it’s open, developers can fine-tune Llama to follow tool-use protocols precisely or integrate at the library level with Python, etc. Llama 3 doesn’t yet have an out-of-the-box equivalent of OpenAI’s function calling, though an open-source agent framework from Meta could plausibly emerge (this is speculation based on Meta AI papers, not an announced product).
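The search-then-answer pipeline described above boils down to packing retrieved snippets into the prompt. The sketch below shows one way to do that; the prompt wording, the character budget, and the `build_grounded_prompt` helper are assumptions for illustration, not Meta's actual pipeline.

```python
def build_grounded_prompt(question: str, snippets: list[str], max_chars: int = 2000) -> str:
    """Pack numbered search snippets into a prompt, stopping at the budget."""
    kept: list[str] = []
    used = 0
    for i, snippet in enumerate(snippets, start=1):
        entry = f"[{i}] {snippet.strip()}"
        if used + len(entry) > max_chars:
            break  # drop lower-ranked snippets once the budget is spent
        kept.append(entry)
        used += len(entry)
    sources = "\n".join(kept) if kept else "(no results)"
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    hits = ["GPT-5 launched on August 7, 2025.", "Llama 3 was released in April 2024."]
    print(build_grounded_prompt("When did GPT-5 launch?", hits))
```

The resulting string would be sent to the model as ordinary context, which is why this pattern works with any model, open or closed, without a dedicated tool API.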

Other Open Models (Mistral, Cohere, Databricks):

Cohere’s Command models have tool use endpoints. Cohere provides a “system prompt for tools” and specific SDK support to invoke e.g. search or calculators. Command R+ was noted for multi-step tool use ability, and Command A likely continues that. They highlight agentic RAG (Retrieval-Augmented Generation) and tool use as key use cases.

Databricks DBRX, as an open-source LLM, can be integrated into Databricks’ Spark-based workflows or served with the MosaicML inference server, which supports tool-use chaining. DBRX reportedly uses a fine-grained Mixture-of-Experts architecture; its experts could be seen as internal “tools” specialized for tasks, though they are not invoked dynamically the way external tools are.

Mistral AI’s docs talk about “custom structured outputs” and recently added that across models (Jan 2025), suggesting they support function-like outputs or a specified format – a necessary step for reliable API tool usage. Also, Mistral’s partnership making their models available on Azure and GCP means one can plug them into those platforms’ agent tool frameworks.

Multimodality

We touched on this for GPT-5 – it natively processes text+images and possibly audio. OpenAI’s Whisper and GPT integration wasn’t explicitly part of GPT-5, but ChatGPT can accept voice input as of 2025, so from a user standpoint GPT-5 can listen and then answer, using a voice-to-text front-end. It cannot generate images on its own, but via the DALL·E tool it can.

Google’s Gemini is natively multimodal – text, images, audio, and video in, and text (and perhaps images) out. In fact, Gemini’s multimodal capability is a key selling point; it can take in a YouTube video and answer questions about it, or generate captions for an image, and they announced plans for multimodal output in future versions.

Meta’s Llama 3 was trained on images+text and will soon output images too. Meta already demoed an image generator that updates in real time while you type prompts – likely separate from Llama but integrated into their assistant.

Anthropic’s Claude has been more text-focused; however, Claude 4 claims improved visual reasoning in benchmarks. It’s unclear if users can directly feed images to Claude. Anthropic’s documentation by Aug 2025 does not list a public image input feature, so this might be internal evaluation only. We can assume Claude is not yet multimodal for end-users.

Cohere’s models are primarily text; they introduced image understanding in embeddings (Cohere’s multimodal embed model), but the Command series doesn’t take image input as of early 2025 except via a custom workaround.

xAI Grok can analyze images, implying it has some vision capability for users. This was likely part of Musk’s push to integrate it with X’s image posts.

In summary, GPT-5, Gemini, and Llama 3 are multimodal leaders. GPT-5 and Gemini being deployed broadly means many users will experience vision+text AI. Llama 3’s multimodality is more behind-the-scenes (and in smart glasses via Meta’s RayBan integration to identify objects). For enterprise builders, if you need multimodal analysis, GPT-5 or Gemini are the go-to; open models lag a bit in ease-of-use here.

Pricing, Throughput, Latency, Reliability, Rate Limits

This section compares the economics and operational aspects of GPT-5 and its rivals: how fast they run, how much they cost, and how stable they are in production.

Pricing (API and Subscription): OpenAI’s pricing for GPT-5 reflects a strategy to encourage widespread use: it is dramatically cheaper per-token than GPT-4.

GPT-5 (full model): $1.25 per 1M input tokens + $10 per 1M output tokens. That equates to $0.00125 per 1K input and $0.01 per 1K output. GPT-4 previously was roughly 3–5× more expensive.

GPT-5 Mini: $0.25 per 1M input + $2 per 1M output ($0.00025 / $0.002 per 1K). Around 90% of GPT-5 quality for 20% of the price.

GPT-5 Nano: $0.05 / $0.40 per 1M ($0.00005 / $0.0004 per 1K). Comparable to running an open model on your own hardware in cost.
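To make the per-token prices above concrete, here is a small cost calculator. The prices are the ones listed in this section; the dictionary keys and the `request_cost` helper are just labels chosen for this sketch.

```python
# (input, output) USD per 1M tokens, as listed in this section.
PRICES_PER_MILLION = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed prices."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Example workload: 10,000 input tokens and 2,000 output tokens.
    for name in PRICES_PER_MILLION:
        print(f"{name}: ${request_cost(name, 10_000, 2_000):.4f}")
```

For this workload the full model costs about $0.0325 per request and Mini about $0.0065, which is the 20% ratio mentioned above. Note that output tokens dominate the bill even though they are far fewer.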

In ChatGPT, Plus at $20/month now includes GPT-5 usage, with caps on extremely long queries. ChatGPT Pro (Beta) is a higher-price tier with GPT-5 Pro access – likely with extended reasoning and priority rate limits.

Enterprise deals likely bundle GPT-5 with existing ChatGPT Enterprise terms, often at a per-seat monthly rate or committed API spend.

Competitor API pricing:

Anthropic Claude: Opus at $15 per million input, $75 per million output. Sonnet at $3 in, $15 out per million.

Google Gemini: Pricing not yet public at launch, but expected competitive with OpenAI for cloud customers.

Microsoft Azure OpenAI: Pricing generally mirrors OpenAI’s, with possible premiums for dedicated capacity or discounts for reserved usage.

Cohere: Historically ~$1.6 per million tokens for large models; Command A pricing expected slightly under GPT-4-level rates.

Open-Source (Meta, Mistral, Databricks): Hardware cost dominates – Llama 3 70B on A100s can cost ~$30 per million tokens in cloud GPU time, more than GPT-5’s API price.

Competitive Landscape

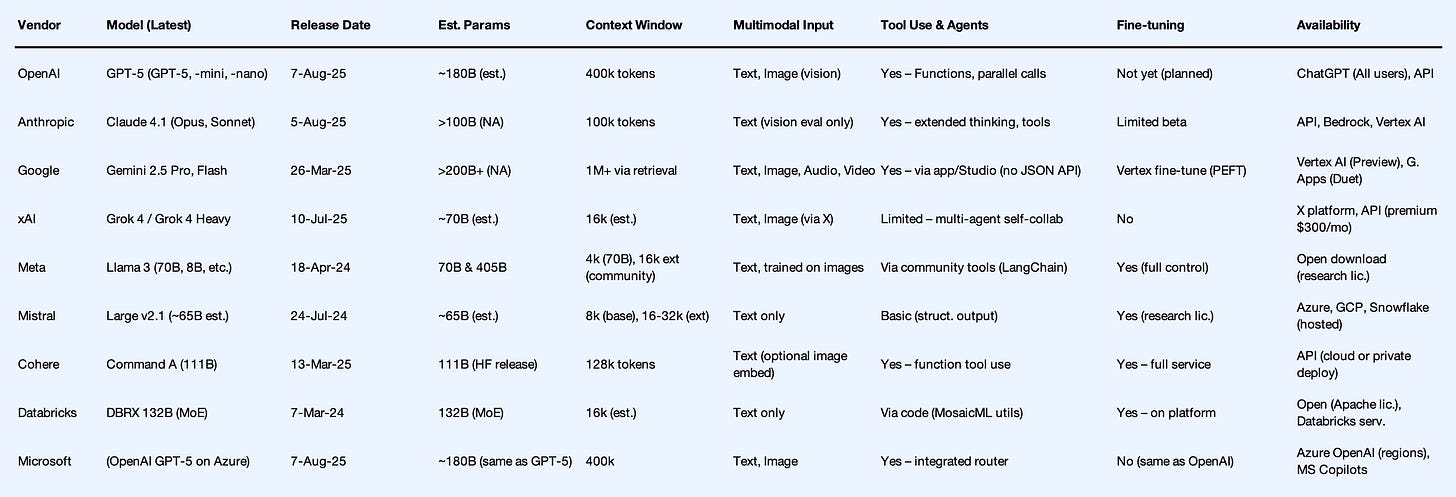

Now we step back and compare GPT-5 with the leading contemporary models, one by one, across key dimensions. We’ll use “mini scorecards” summarizing each competitor’s strengths and weaknesses relative to GPT-5 (see Exhibit E1 for a spec summary and E2 for benchmarks). The competitors reviewed include: Anthropic Claude 4.1, Google Gemini 2.5, xAI Grok 4, Meta Llama 3 (open weights), Mistral Large 2, Cohere Command family (esp. Command A), Databricks/Mosaic (DBRX), and others (Apple’s on-device AI, Microsoft’s small models).

Anthropic Claude 4.1 (Opus and Sonnet)

Model and Specs: Claude 4.1 is Anthropic’s latest as of August 2025. “Opus 4.1” is the top model, roughly analogous in size to GPT-4/5 (exact parameters not public, likely >100B). “Sonnet 4” is a lighter model (perhaps ~20B) for faster queries, also updated in 4.1. Context window: 100,000 tokens (impressive, though effective use up to 64k shown). Claude is primarily text-based but has been evaluated on multimodal tasks with extended reasoning; no public image input yet. Available via API, and integrated in Slack GPT and other partnerships.

Capabilities: Claude 4.1’s hallmark is its extended reasoning (“Constitutional AI” approach). It was designed to be very good at complex multi-step tasks. For coding, as noted, Claude Opus 4 matches GPT-5 closely on agentic coding benchmarks (SWE-bench ~74-75%). Users often report Claude is more verbose but also better at following very detailed, lengthy instructions without losing context (likely due to fine-tuning on long conversations). Claude 4 excels at tool use with reasoning: it held the prior record on certain chain-of-thought benchmarks (Anthropic reported state-of-the-art results on the TAU benchmark for tool-augmented reasoning before GPT-5). One area where Claude was known to surpass GPT-4 was windowed question answering: with the 100k context, Claude could summarize or answer from extremely large texts earlier. Claude’s weakness relative to GPT-5 might be in strict factual accuracy; OpenAI’s data suggests GPT-5 (thinking mode) hallucinates much less. Anthropic has improved Claude’s honesty (it reduced “loophole” use by 65% vs earlier Claude), but anecdotally Claude sometimes over-explains or includes extraneous incorrect info. On HLE (Humanity’s Last Exam), Claude 4 is presumably around 20% (an extrapolation rather than a reported score: Claude 3.7 scored 5.4% and GPT-5 24.8%). Grok beat Claude on HLE specifically. So GPT-5 likely outperforms Claude in extremely knowledge-intensive queries and some math (Anthropic hasn’t highlighted math competition wins like OpenAI or Google have).

Modalities: Text (excellent), code (excellent), no image input to general public (so behind GPT-5 and Gemini there). Claude can read pseudo-vision data if encoded as text (some users describe images to it).

Agentic Features: Claude 4 can self-trigger “extended thinking” mode where it iteratively decides steps (similar to GPT-5’s router albeit user must call a separate API parameter for Claude to think longer). It can run up to several hours autonomously on tasks, which is impressive – e.g., one test had Claude refactor code for 7 hours continuously. This endurance might outpace GPT-5’s usage limits (OpenAI might not let a single request run for hours). Claude’s memory via file writing is also unique and compelling if you integrate it deeply.

Safety and Tone: Claude is known for being extremely polite, and a bit verbose. Its responses tend to be longer by default than GPT’s. Some users prefer Claude for creative writing because it maintains a coherent, friendly style well. Safety-wise, Claude is constrained by its “constitution” – it refuses certain requests that GPT-4 might have answered (especially anything potentially controversial). GPT-5’s safety improved, but Claude’s approach often yields a more explicitly principled explanation when it refuses (it might quote its rules). For enterprise, both are considered safe, but if a company wants a model that never produces offensive content, they might lean Claude given its track record (less publicized incidents than ChatGPT’s early days). However, GPT-5’s safety profile is now as strong or stronger, so that gap closed.

Integration and Ecosystem: Claude is available on AWS Bedrock and Google Cloud Vertex AI, making it easy to plug into enterprise workflows on those platforms. It also has a nice chat interface (claude.ai) with files support. Anthropic’s partnership with Slack means a lot of business users have easy access to Claude as an assistant in Slack channels. This distribution strategy is different from OpenAI’s direct ChatGPT push but significant. Pricing-wise, Claude is pricier per token as noted, which can deter heavy API users in favor of GPT-5.

Verdict: Where Claude 4.1 leads: slightly better at long-form, flowing responses and a more transparent reasoning style (some prefer its step-by-step answers). It’s also a top choice for long document analysis given the early 100k window and track record. Where it lags: multimodal abilities, raw factual accuracy, and cost efficiency. GPT-5’s broad adoption and Microsoft backing also give it an edge in ecosystem. We’ll watch how Anthropic responds – rumors suggest a Claude 5 might go for a 1M token context and even more tool integrations, to try to leapfrog GPT-5.

Google Gemini 2.5 Pro (& 2.5 Flash)

Model and Specs: Gemini is Google DeepMind’s flagship foundation model (successor to PaLM 2, joint effort of Google Brain + DeepMind). Gemini 2.5 Pro released March 2025 is the current top model. It’s multimodal from the ground up and uses “thinking” (chain-of-thought) by default. Google doesn’t disclose size; speculation: maybe 300B+ parameters with Mixture-of-Experts. It’s served on Google’s TPUv5 pods. Context: up to 1,000,000 tokens with external retrieval (Flash version might have 100k natively). Input modalities: text, images, audio, video frames; Output: text (and possibly structured data, code, or even images in future). Google also has Gemini 2.5 Flash, a faster, smaller model for high QPS, and Gemini 1.5 for basic tasks.

Capabilities: Gemini 2.5 made headlines for topping human-preference leaderboards (LMArena) in early 2025. It excels in reasoning-heavy tasks: Google cited math and science dominance (GPQA, AIME), though GPT-5 has since matched or exceeded those numbers. Notably, Gemini achieved 18.8% on HLE without tools – behind GPT-5 and Grok, but that was before their updates. On coding, Gemini is strong but not #1: 63.8% on SWE-Bench Verified (with a special agent, possibly limiting fairness). Claude and GPT-5 beat that by ~10 points. Still, 63.8% is respectable, above GPT-4.

Gemini’s differentiator is multimodal tasks. It can handle things GPT-5 cannot (yet) do natively, like output structured image descriptions or answer audio-based questions. For example, Google demoed an AI that can create a playable video game from a single prompt (Gemini generated code and even suggested game assets). Also, long-context data analysis: 2.5 Pro can “comprehend vast datasets” and cross-reference multiple sources. With the promise of 2 million token context, it might outscale GPT-5 in tasks like cross-document synthesis if that materializes.

However, Google’s models sometimes lag in instruction-following finesse. GPT-4 had an edge in following arbitrary user instructions reliably; Google closed the gap by fine-tuning on instructions and human feedback. By 2.5, this likely isn’t a big issue, but some developers find PaLM/Gemini outputs less polished in conversational style than OpenAI’s – possibly due to differences in RLHF approaches.

Modalities: This is where Gemini leads. For vision: it not only understands images but can also reason about video (e.g., summarizing a clip). Audio: DeepMind’s heritage includes speech models, so Gemini likely inherited strong ASR or at least audio comprehension. There’s also early mention that future Gemini might generate images or control robots (DeepMind’s robotics integration). GPT-5 is more limited (tools needed for any non-text output).

Enterprise Features: Google brings Gemini via Vertex AI in GCP, and also in Google Workspace (Duet AI). So if an enterprise is a Google shop, using Gemini is a natural extension – their docs imply it’ll be an option in Gmail, Docs, etc., for AI assistance. Data privacy: Google has enterprise privacy commitments; for Duet, they do not use customer data to train models by default now, following industry trend.

Weaknesses: Availability – as of Aug 2025, Gemini 2.5 Pro is in “experimental” release. It might be gated to select users or quotas might be tight initially. OpenAI’s GPT-5 being widely available (even to free users, albeit limited) contrasts with Google’s cautious rollout. Another issue is ecosystem: OpenAI has an extensive plugin/tool developer community from ChatGPT, whereas Google’s is more closed. Performance-wise, GPT-5 has likely edged out Gemini on pure NLP tasks, given OpenAI’s leap ahead on benchmarks like MMLU, HLE, etc., post-March 2025. But the margin might be small.

Verdict: Where Gemini leads: Multimodal capabilities (vision/video), ultra-long context scaling, and tight integration into Google’s ecosystem (which matters if you want AI in Google Cloud or Google Apps). Where it lags: Slightly behind GPT-5 in top-end reasoning/coding benchmarks and in public availability; also Google’s offerings often require using GCP specifically, which some enterprises might avoid. Expect Google to respond with Gemini 3.0 perhaps by end of 2025, aiming to reclaim coding crown and further push multimodality. The race between OpenAI and Google is clearly heating up: each new model leapfrogs somewhere. For now, GPT-5 has the better all-around consistency and is battle-tested by millions of users, whereas Gemini is extremely promising, especially for those needing that broad modality support.

xAI Grok 4 (and “Grok Heavy”)

Model and Background: xAI is the startup founded by Elon Musk in 2023, with a mission of creating a maximally truth-seeking AI (“TruthGPT”). Grok 4 launched in July 2025. It’s presumably based on an open-source architecture (some speculate a Llama 2 fork heavily tuned, or a from-scratch model in the 70B+ range). xAI’s approach was to leverage Twitter (now X) data and a more unfiltered training regimen to produce a model that “has a personality” and will entertain edgy questions. Grok 4 comes in two tiers: the base Grok 4, and Grok 4 Heavy which runs multiple agent instances in parallel. Access is through X’s interface and an API (with premium pricing).

Capabilities: Grok 4 made bold claims (Musk said it’s “better than PhD level in every subject” – clearly hyperbole). On measurable benchmarks, Grok 4 does show frontier-level performance on select tasks:

On Humanity’s Last Exam (HLE), Grok 4 scored 25.4% (no tools), slightly topping GPT-5’s 24.8%. This suggests Grok’s knowledge and reasoning are excellent on hard academic questions.

With tools (presumably using its multi-agent or search abilities), Grok 4 Heavy reached 44.4% on HLE, which is way ahead of Gemini’s 26.9%. So in that augmented scenario, Grok leaped to state of the art. It indicates Grok’s multi-agent approach can yield huge gains, though one wonders if it cherry-picked the scenario.

On ARC’s AGI evaluations (essentially puzzle/pattern recognition tests), Grok achieved a new SOTA of 16.2% on the ARC-AGI-2 test, nearly double the next best (Claude Opus 4 was ~8%). This is a narrow metric, but an important one in AI research – it signals Grok might have some novel problem-solving heuristics.

We don’t have direct coding benchmark results for Grok. Given its focus was Q&A and reasoning, coding might not be its top strength. xAI plans a dedicated coding model, implying current Grok might be below GPT-4. For now, Grok 4 is competent but likely behind GPT-4.5/GPT-5 in complex coding.

Strengths: Grok is unfiltered (to an extent) and has a “sense of humor” – Musk’s vision was an AI that responds with potentially snarky or politically incorrect tone if it deems it appropriate. For some users, Grok is more fun or direct. Early testers saw it make jokes about sensitive topics where ChatGPT would refuse. This can be seen as a feature or a liability, depending on context. From a competitive standpoint, xAI positioned Grok to appeal to those frustrated by strict content rules elsewhere.

It is also deeply integrated with X (Twitter), enabling it to pull real-time info and be used within X’s interface for summarizing posts or answering queries using live data. This real-time capability is akin to Bing Chat with search. For enterprises not on X, this may not matter, but it shows how xAI leverages a unique asset.

Weaknesses: Safety and reliability. Grok had a high-profile safety failure: it produced antisemitic replies and praised Hitler in an automated X account, due to a system prompt telling it not to be politically correct. This caused bad press and a scramble to patch. For enterprise use, that’s a huge red flag – most companies can’t risk an AI that might go off the rails publicly. xAI is only two months into targeting enterprise, so they lack compliance certifications like SOC2. Their own admission: it’s “difficult to pitch to businesses flaws and all.”

Additionally, the multi-agent approach might be computationally expensive, making Grok Heavy costly to run (hence $300/month price). If each query runs five model instances, that’s 5× the compute per answer. It might pay off for extremely hard tasks, but for everyday use it’s overkill.

Enterprise readiness: Minimal. xAI mentioned working with cloud hyperscalers to offer Grok via their platforms – nothing announced yet. Pricing is telling: $300/month “SuperGrok Heavy” subscription for early access – the highest among AI providers. No granular usage-based pricing, which enterprises prefer. It feels more like a consumer/prosumer play targeting enthusiasts or small teams wanting an alternative AI.

Verdict: Where Grok leads: cutting-edge performance on hard benchmarks (HLE, ARC) – impressive for a newcomer, likely due to novel data or techniques. If you need an AI for exploring the hardest puzzles or a model with a different “viewpoint” than OpenAI/Anthropic, Grok could be a useful second opinion. Where it lags: practically everywhere else for enterprise – reliability, guardrails, integration, cost structure. GPT-5 is far safer and more polished. Grok’s edgy style is a liability in professional settings. Over time, xAI might refine their model (Grok 5 by end of 2025 is teased) and try to “outsmart GPT-5,” but they’ll need to prove they can handle safety.

Meta Llama 3 (Open Source Family)

Model and Approach: Meta’s Llama 3 was released as an open (research-permissive) model in April 2024, followed by minor versions (3.1, 3.2) later that year. It initially came in 8B and 70B sizes, and Meta later announced a huge 405B version (Llama 3.1 405B) as the “largest openly available model.” All are available for research and commercial use (with possible restrictions for the largest). The Llama 3 models are foundational and require fine-tuning for chat, but the community quickly produced instruction-tuned variants (e.g., Llama-3-70B-Chat). Llama 3 introduced multilingual, multimodal training: it was trained on English text, images, and multiple languages.

Capabilities: Llama 3’s raw performance significantly improved over Llama 2 and is competitive with closed models from 2023:

Llama 3’s 70B matched or exceeded “GPT-4 8k” on many benchmarks as of early 2024, likely near GPT-4 in MMLU (~85%).

The 405B Llama 3.1 aimed to reach GPT-4+ levels openly. Running 405B is non-trivial; unclear how widely it’s used.

Strong coding abilities when fine-tuned (e.g., “CodeLlama 3”). Llama 3 70B probably reaches GPT-3.5 to GPT-4 quality in coding – not state-of-the-art, but close enough for many use cases.

Strength: Customization and cost. Companies can fine-tune it on internal data and deploy on their own infrastructure, avoiding API costs and data sharing. The open ecosystem is huge – hundreds of fine-tunes, extensions, and optimizations.

Modalities: Llama 3 included images in training; Meta indicated it will eventually output images. Currently, Llama 3 outputs text only, but can be paired with an image model (Meta’s or Stable Diffusion). Meta integrated Llama into WhatsApp and Instagram with image recognition and search integration.

Enterprise Features: As it’s open, data privacy is inherent – you keep everything in-house. Compliance is the user’s responsibility. No official Meta support beyond releases. Third parties offer support for Llama deployments. Meta’s motive: slow rivals’ monetization and set an open standard, succeeding in some sectors where enterprises want control over AI IP.

Performance vs GPT-5: Out-of-the-box Llama 3 70B is weaker in conversation than GPT-5. With fine-tuning, it can be close to GPT-4. GPT-5’s large lead in things like HMMT (93% vs GPT-4’s 29%) shows what massive scale can do. That said, an instruct-tuned Llama 70B might deliver ~85% of ChatGPT’s quality at a fraction of the incremental cost. In niche domains, a fine-tuned open model can even surpass GPT-5.

Safety: Open nature means uncensored variants exist. Enterprises can allow or restrict outputs as desired.

Verdict: Where Llama 3 wins: total control, cost predictability, fine-tuning flexibility, device-embedding. Where it loses: raw performance and convenience. GPT-5 still outperforms on the toughest tasks. Llama is the leading open foundation model, likely to power many behind-the-firewall apps and research experiments. Gap could narrow with Llama 4 in 2026.

Mistral AI (Large 2 and “Mixtral”)

About: French startup (founded 2023) focusing on open large models. Released Mistral Large 2 in July 2024, followed by the smaller “Les Ministraux” models (Ministral 3B and 8B). Large 2 has 123B parameters and a multilingual focus. Mistral also has a code model, “Codestral” (22B).

Performance: Mistral reported that Large 2 is competitive with the much larger Llama 3.1 405B on code and reasoning benchmarks. Close to Llama in reasoning, but not at GPT-4 tier. Large 2 is released under a more restrictive license than Mistral’s earlier open models (free for research, commercial use requires licensing).

Innovation: Mistral’s “Mixtral” models (8x7B, 8x22B) use a sparse Mixture-of-Experts design in which a router activates only a few experts per token, allowing a huge effective size with selective activation, similar in spirit to Google’s Switch Transformers.
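
The selective activation behind a sparse Mixture-of-Experts layer can be sketched in a few lines. This is a minimal illustration, assuming top-2 routing over toy scalar "experts"; the function names (top_k_route, moe_layer) and the example router scores are hypothetical and are not taken from Mistral’s or Google’s code.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i],
                    reverse=True)[:k]
    weights = softmax([router_logits[i] for i in ranked])
    return list(zip(ranked, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy setup (assumption): four "experts" that simply scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # invented scores for one token

selected = top_k_route(router_logits)
output = moe_layer(1.0, experts, router_logits)
```

Only two of the four experts run for this token, which is the point of the design: total parameter count can grow with the number of experts while per-token compute stays roughly fixed by k.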

Enterprise angle: Available on Azure, Google Cloud Vertex AI, and Snowflake Cortex AI. EU-based, which appeals to buyers concerned with European data sovereignty. Agile team, responsive to developer needs (e.g., structured output features).

Comparison: GPT-5 far ahead in capability. Mistral’s role: alternative open model when Meta’s license is unsuitable. Currently overshadowed by Llama in quality and adoption.

Cohere Command (Command A, R, R+)

Company: Canadian AI firm focused on enterprise NLP. Command family is tuned for enterprise needs.

Lineup:

Command A (03-2025): ~111B parameters, optimized for efficiency.

Command R (03-2024) and Command R+ (04-2024), both refreshed in 08-2024: earlier generations, 128k context.

Performance: Cohere claims Command A matches GPT-4-class quality at much lower compute. Likely GPT-3.5++ to GPT-4 tier depending on domain. Strong at summarization, classification, and multilingual tasks.

Enterprise features:

Customization/fine-tuning pipeline.

Data isolation: cloud API, dedicated clusters, on-prem.

Security/compliance: SOC2, HIPAA, etc.

Pricing flexibility: SaaS contracts, dedicated deployments.

Weaknesses vs GPT-5: Less public benchmark validation; probably behind in complex reasoning/coding. Lacks GPT-5’s dynamic reasoning router.

Verdict: Where it leads: efficiency, enterprise integration, privacy deployments. Where it lags: cutting-edge performance, broad ecosystem. Strategy: be “good enough” but more controllable than GPT-5.

Databricks & MosaicML (DBRX)

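Context: Databricks acquired MosaicML (2023). Released DBRX in March 2024, an open-weight MoE model (~132B total parameters, ~36B active per token). Outperformed Llama 2 70B at release and, in Databricks’ own evaluations, surpassed GPT-3.5; possibly near GPT-4 on some tasks. Released under the Databricks Open Model License rather than Apache 2.0.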

Enterprise features:

Integrated into Databricks Lakehouse for direct data querying and code gen.

Fine-tuning with MosaicML pipelines.

Open weights with no per-token fees, subject to the license terms; run on-prem or in the cloud.

Comparison: Likely below GPT-5 in reasoning/knowledge but strong for enterprise data workflows. Best fit for Databricks users who want an integrated, private LLM.

Others: Apple, Microsoft small models

Apple: On-device LLM for iOS/macOS, opened to developers at WWDC 2025. Roughly 3B parameters, optimized for Apple silicon. Private, offline, suited for personal data tasks. Not a GPT-5 competitor in cloud AI, but a complement to it.

Microsoft: Research into Small Language Models (the Phi family, starting with Phi-1 and Phi-2) that achieve good reasoning for their size. Primarily integrating GPT-5 into Copilot products across Office, Windows, and Azure, locking in an ecosystem advantage.

Stability AI: Focus shifting to multimodal; not a direct GPT-5 competitor.

Adept.ai: Building agents for software automation.

Character AI, NovelAI: Consumer chat niches.

Routing/Aggregators: Companies route each query to the cheapest capable model, reserving GPT-5 for the hardest tasks. This pressures closed-model providers to offer multiple pricing tiers (e.g., GPT-5 mini/nano).
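
The "cheapest capable model" idea can be made concrete with a short sketch. Everything below is an assumption for illustration: the capability scores, the relative cost units, the keyword heuristic, and the tier named local-open-70b are invented, and production routers typically rely on a trained classifier rather than keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    capability: int       # hypothetical 1-10 score, not a benchmark result
    relative_cost: float  # invented cost units, not real pricing


# Illustrative catalog: names echo the article, numbers are placeholders.
CATALOG = [
    ModelTier("gpt-5-nano", capability=4, relative_cost=1.0),
    ModelTier("local-open-70b", capability=5, relative_cost=2.0),
    ModelTier("gpt-5-mini", capability=7, relative_cost=5.0),
    ModelTier("gpt-5", capability=10, relative_cost=25.0),
]

HARD_MARKERS = ("prove", "derive", "refactor", "debug", "multi-step")


def estimate_difficulty(prompt: str) -> int:
    """Crude stand-in for a learned difficulty classifier (assumption)."""
    score = 3
    lowered = prompt.lower()
    if any(marker in lowered for marker in HARD_MARKERS):
        score += 5
    if len(prompt.split()) > 200:
        score += 2
    return min(score, 10)


def route(prompt: str, catalog=CATALOG) -> ModelTier:
    """Return the cheapest tier whose capability covers the estimated need."""
    need = estimate_difficulty(prompt)
    capable = [m for m in catalog if m.capability >= need]
    if not capable:
        # Nothing meets the bar: fall back to the strongest available tier.
        return max(catalog, key=lambda m: m.capability)
    return min(capable, key=lambda m: m.relative_cost)


easy = route("Summarize this email in one line.")
hard = route("Prove the lemma, then debug my derivation step by step.")
```

With these placeholder numbers, the easy prompt goes to the cheapest tier and the hard prompt escalates to gpt-5. In practice the interesting work is in the difficulty estimate and in measuring whether cheaper tiers actually meet the quality bar.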

Conclusion

GPT-5 solidifies OpenAI’s position at the forefront of AI, delivering incremental yet meaningful improvements across the board – it’s faster, more knowledgeable, more context-aware, and safer than its predecessors. In head-to-head comparisons, GPT-5 is the model to beat in 2025, edging out rivals in coding, broad reasoning, and reliability. Crucially, it arrives tightly integrated into the tools of work (Microsoft’s Copilots), giving it unmatched reach.

GPT-5 leads the pack as of August 2025, particularly in delivering enterprise-grade AI services at scale. Yet the lead is hard-won and not guaranteed to persist. The race among AI model providers is entering a more pragmatic phase: beyond one-upping each other on benchmarks, it is about delivering real value (accurate, secure, efficient AI) in organizations. GPT-5 sets a high bar on that front. Competitors are learning to compete not just on model prowess but on integration, customization, and trust. The winners in the next phase will be those who combine technological excellence with these enterprise necessities. Right now, GPT-5 is the frontrunner, but the race is far from over, and the next lap is already underway.

Disclaimer

The content of Catalaize is provided for informational and educational purposes only and should not be considered investment advice. While we occasionally discuss companies operating in the AI sector, nothing in this newsletter constitutes a recommendation to buy, sell, or hold any security. All investment decisions are your sole responsibility—always carry out your own research or consult a licensed professional.